2026

|

| Wenfang Sun, Hao Chen, Yingjun Du, Yefeng Zheng, Cees G M Snoek: RegionReasoner: Region-Grounded Multi-Round Visual Reasoning. In: ICLR, 2026. @inproceedings{SunICLR2026,

title = {RegionReasoner: Region-Grounded Multi-Round Visual Reasoning},

author = {Wenfang Sun and Hao Chen and Yingjun Du and Yefeng Zheng and Cees G M Snoek},

year = {2026},

date = {2026-04-24},

booktitle = {ICLR},

abstract = {Large vision-language models have achieved remarkable progress in visual reasoning, yet most existing systems rely on single-step or text-only reasoning, limiting their ability to iteratively refine understanding across multiple visual contexts. To address this limitation, we introduce a new multi-round visual reasoning benchmark with training and test sets spanning both detection and segmentation tasks, enabling systematic evaluation under iterative reasoning scenarios. We further propose RegionReasoner, a reinforcement learning framework that enforces grounded reasoning by requiring each reasoning trace to explicitly cite the corresponding reference bounding boxes, while maintaining semantic coherence via a global–local consistency reward. This reward extracts key objects and nouns from both global scene captions and region-level captions, aligning them with the reasoning trace to ensure consistency across reasoning steps. RegionReasoner is optimized with structured rewards combining grounding fidelity and global–local semantic alignment. Experiments on detection and segmentation tasks show that RegionReasoner-7B, together with our newly introduced benchmark RegionDial-Bench, considerably improves multi-round reasoning accuracy, spatial grounding precision, and global–local consistency, establishing a strong baseline for this emerging research direction.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Large vision-language models have achieved remarkable progress in visual reasoning, yet most existing systems rely on single-step or text-only reasoning, limiting their ability to iteratively refine understanding across multiple visual contexts. To address this limitation, we introduce a new multi-round visual reasoning benchmark with training and test sets spanning both detection and segmentation tasks, enabling systematic evaluation under iterative reasoning scenarios. We further propose RegionReasoner, a reinforcement learning framework that enforces grounded reasoning by requiring each reasoning trace to explicitly cite the corresponding reference bounding boxes, while maintaining semantic coherence via a global–local consistency reward. This reward extracts key objects and nouns from both global scene captions and region-level captions, aligning them with the reasoning trace to ensure consistency across reasoning steps. RegionReasoner is optimized with structured rewards combining grounding fidelity and global–local semantic alignment. Experiments on detection and segmentation tasks show that RegionReasoner-7B, together with our newly introduced benchmark RegionDial-Bench, considerably improves multi-round reasoning accuracy, spatial grounding precision, and global–local consistency, establishing a strong baseline for this emerging research direction. |

| Răzvan-Andrei Matişan, Vincent Tao Hu, Grigory Bartosh, Björn Ommer, Cees G M Snoek, Max Welling, Jan-Willem van de Meent, Mohammad Mahdi Derakhshani, Floor Eijkelboom: Purrception: Variational Flow Matching for Vector-Quantized Image Generation. In: ICLR, 2026. @inproceedings{MatisanICLR2026,

title = {Purrception: Variational Flow Matching for Vector-Quantized Image Generation},

author = {Răzvan-Andrei Matişan and Vincent Tao Hu and Grigory Bartosh and Björn Ommer and Cees G M Snoek and Max Welling and Jan-Willem van de Meent and Mohammad Mahdi Derakhshani and Floor Eijkelboom},

url = {https://arxiv.org/abs/2510.01478},

year = {2026},

date = {2026-04-23},

urldate = {2025-10-01},

booktitle = {ICLR},

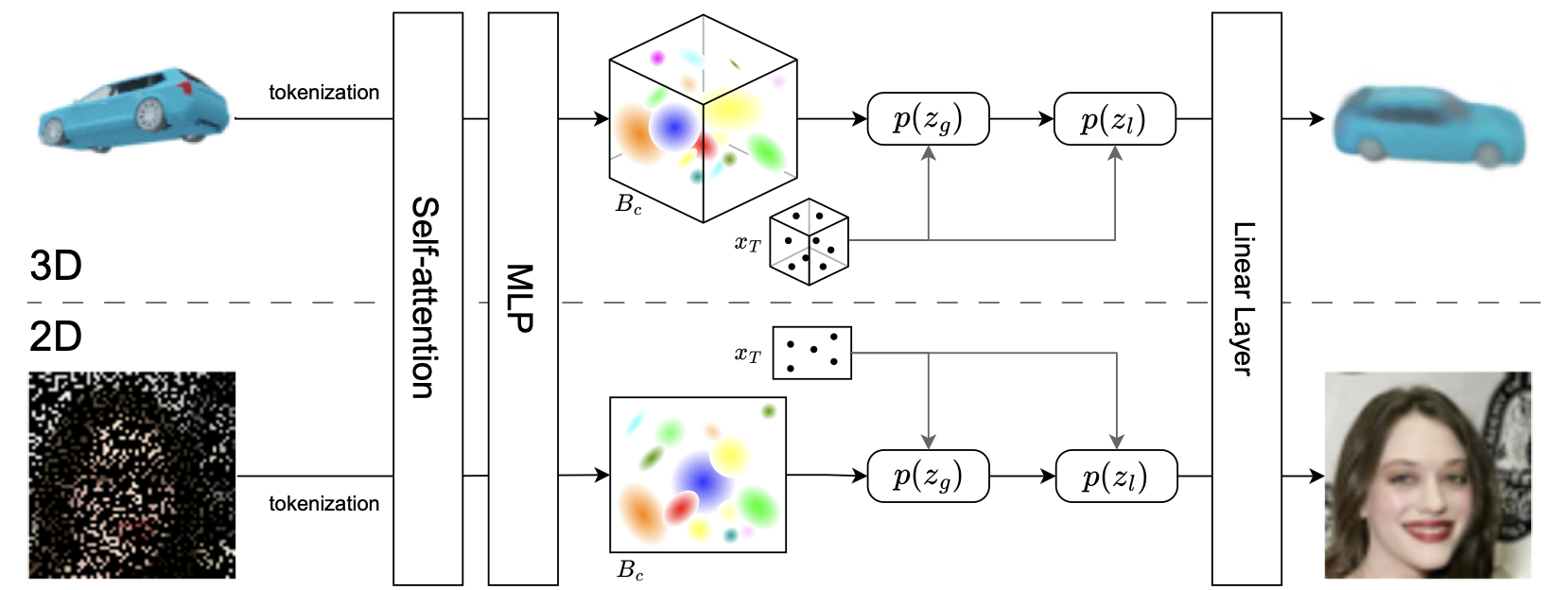

abstract = {We introduce Purrception, a variational flow matching approach for vector-quantized image generation that provides explicit categorical supervision while maintaining continuous transport dynamics. Our method adapts Variational Flow Matching to vector-quantized latents by learning categorical posteriors over codebook indices while computing velocity fields in the continuous embedding space. This combines the geometric awareness of continuous methods with the discrete supervision of categorical approaches, enabling uncertainty quantification over plausible codes and temperature-controlled generation. We evaluate Purrception on ImageNet-1k 256x256 generation. Training converges faster than both continuous flow matching and discrete flow matching baselines while achieving competitive FID scores with state-of-the-art models. This demonstrates that Variational Flow Matching can effectively bridge continuous transport and discrete supervision for improved training efficiency in image generation.},

howpublished = {arXiv:2510.01478},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

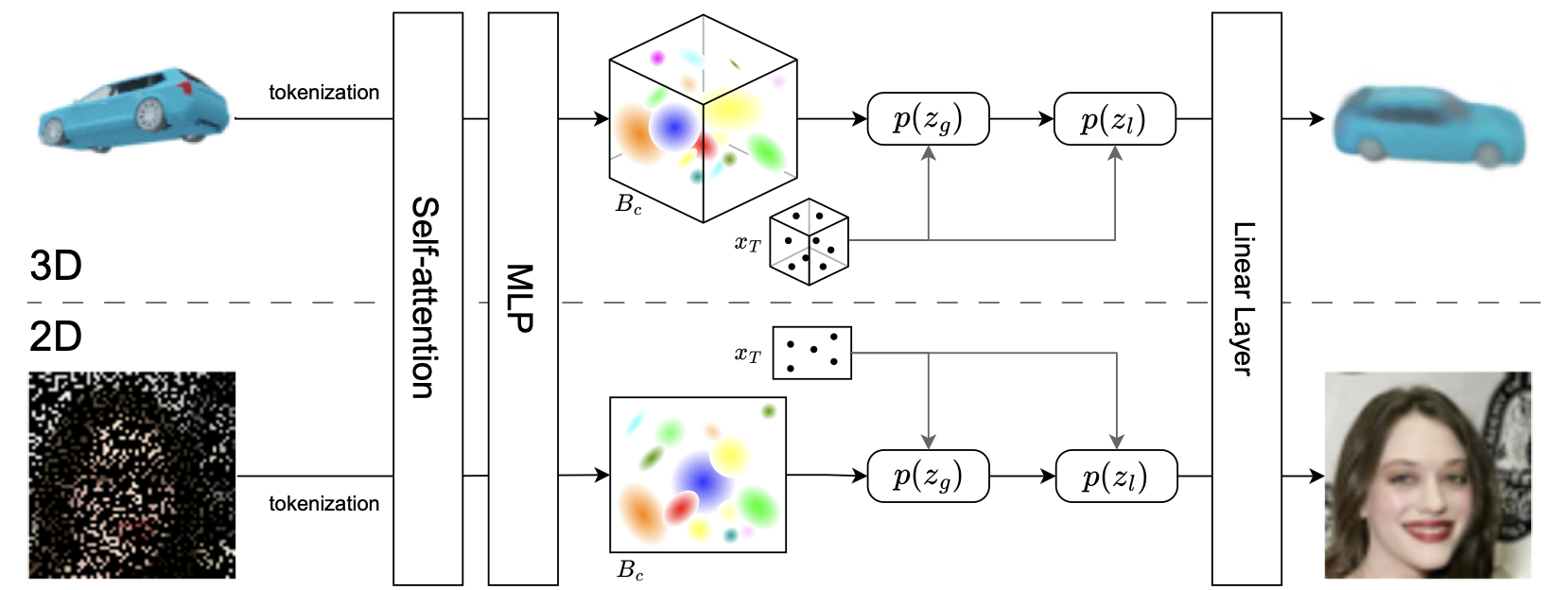

We introduce Purrception, a variational flow matching approach for vector-quantized image generation that provides explicit categorical supervision while maintaining continuous transport dynamics. Our method adapts Variational Flow Matching to vector-quantized latents by learning categorical posteriors over codebook indices while computing velocity fields in the continuous embedding space. This combines the geometric awareness of continuous methods with the discrete supervision of categorical approaches, enabling uncertainty quantification over plausible codes and temperature-controlled generation. We evaluate Purrception on ImageNet-1k 256x256 generation. Training converges faster than both continuous flow matching and discrete flow matching baselines while achieving competitive FID scores with state-of-the-art models. This demonstrates that Variational Flow Matching can effectively bridge continuous transport and discrete supervision for improved training efficiency in image generation. |

| Aritra Bhowmik, Denis Korzhenkov, Cees G M Snoek, Amirhossein Habibian, Mohsen Ghafoorian: MoAlign: Motion-Centric Representation Alignment for Video Diffusion Models. In: ICLR, 2026. @inproceedings{BhowmikICLR2026,

title = {MoAlign: Motion-Centric Representation Alignment for Video Diffusion Models},

author = {Aritra Bhowmik and Denis Korzhenkov and Cees G M Snoek and Amirhossein Habibian and Mohsen Ghafoorian},

url = {https://arxiv.org/abs/2510.19022},

year = {2026},

date = {2026-04-23},

urldate = {2025-10-21},

booktitle = {ICLR},

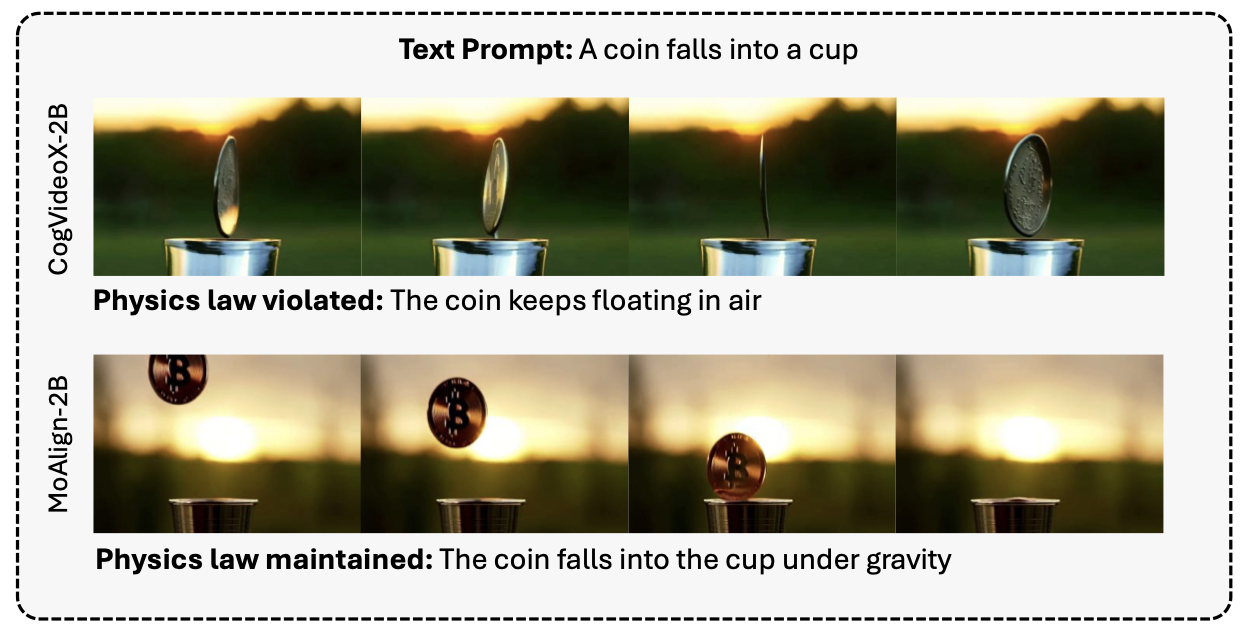

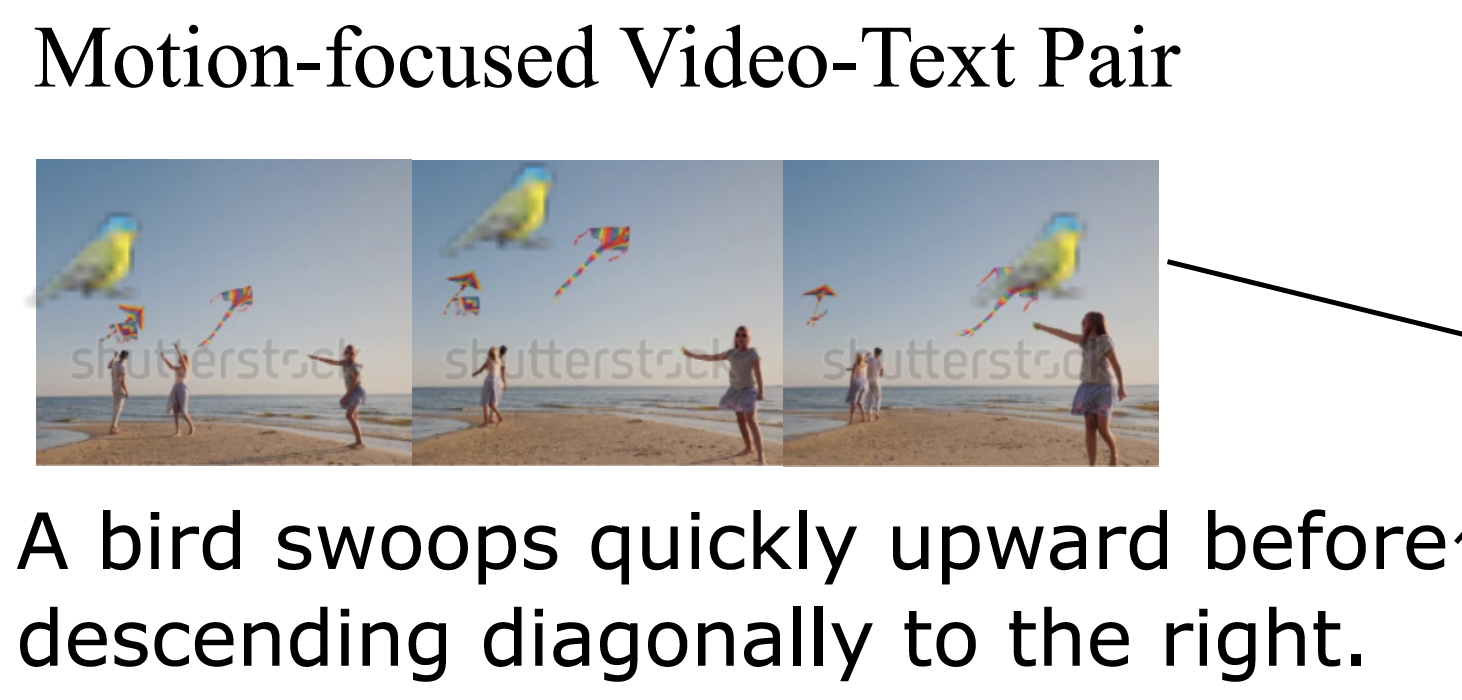

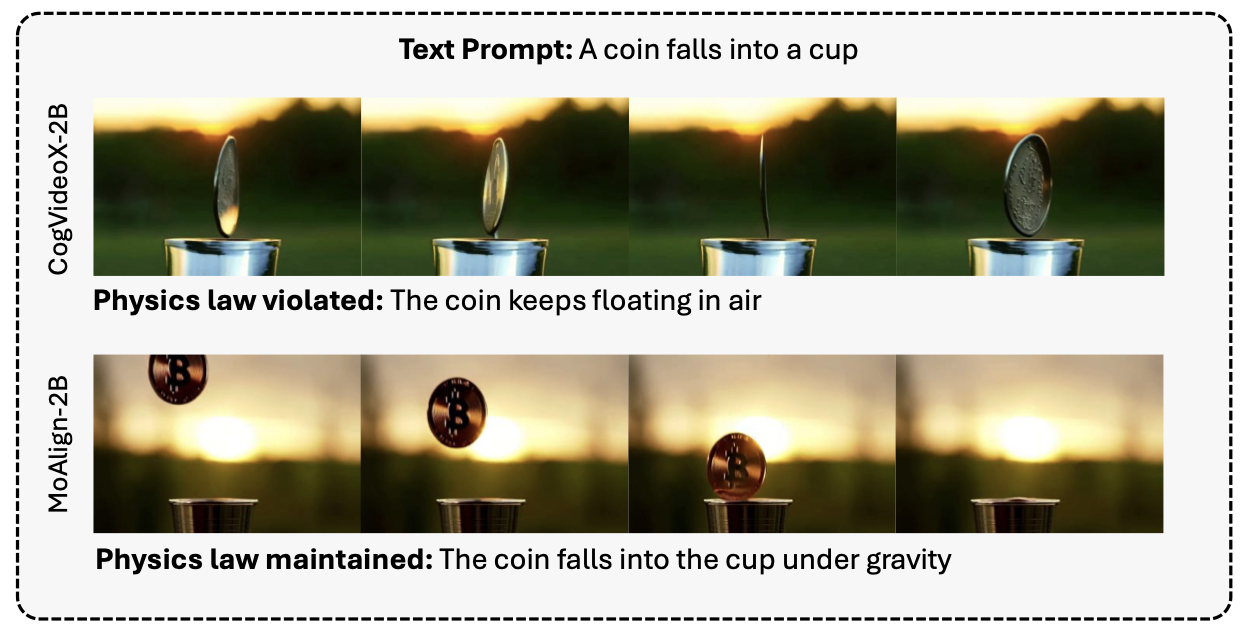

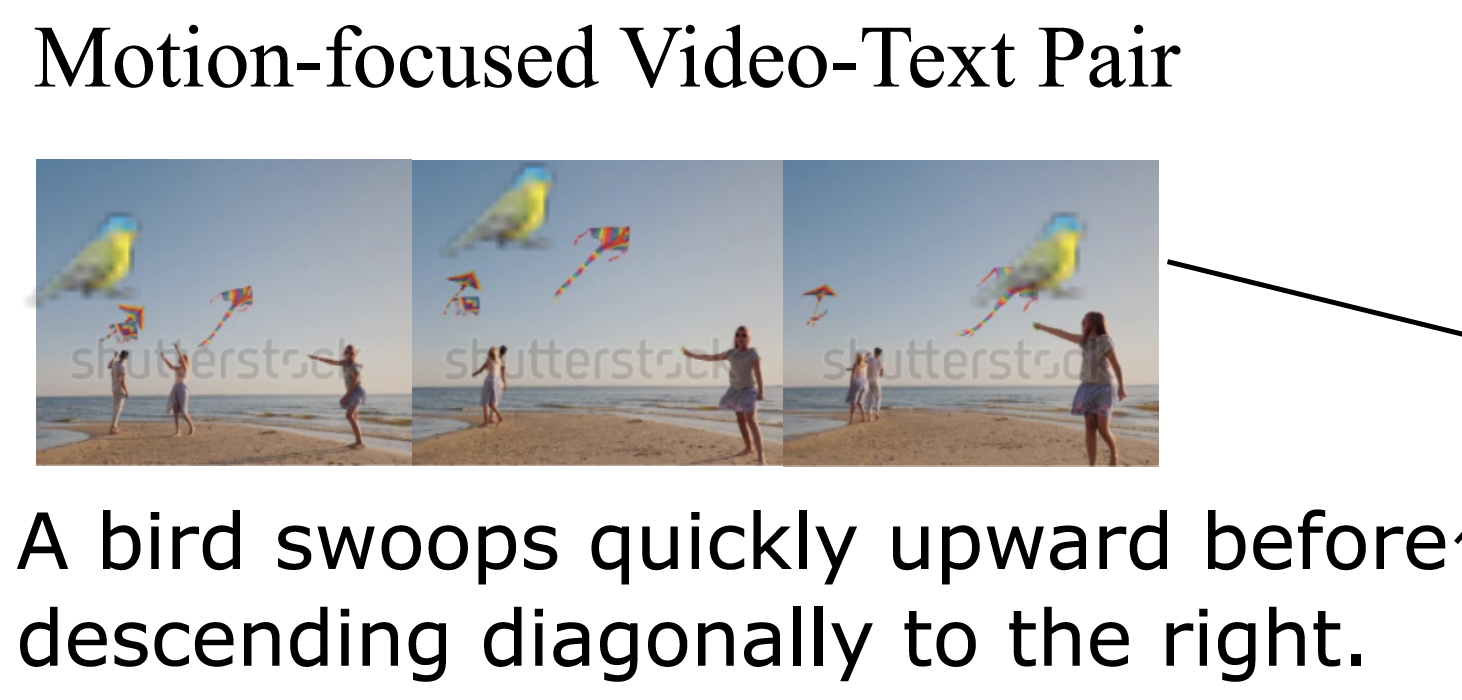

abstract = {Text-to-video diffusion models have enabled high-quality video synthesis, yet often fail to generate temporally coherent and physically plausible motion. A key reason is the models' insufficient understanding of complex motions that natural videos often entail. Recent works tackle this problem by aligning diffusion model features with those from pretrained video encoders. However, these encoders mix video appearance and dynamics into entangled features, limiting the benefit of such alignment. In this paper, we propose a motion-centric alignment framework that learns a disentangled motion subspace from a pretrained video encoder. This subspace is optimized to predict ground-truth optical flow, ensuring it captures true motion dynamics. We then align the latent features of a text-to-video diffusion model to this new subspace, enabling the generative model to internalize motion knowledge and generate more plausible videos. Our method improves the physical commonsense in a state-of-the-art video diffusion model, while preserving adherence to textual prompts, as evidenced by empirical evaluations on VideoPhy, VideoPhy2, VBench, and VBench-2.0, along with a user study.},

howpublished = {arXiv:2510.19022},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Text-to-video diffusion models have enabled high-quality video synthesis, yet often fail to generate temporally coherent and physically plausible motion. A key reason is the models' insufficient understanding of complex motions that natural videos often entail. Recent works tackle this problem by aligning diffusion model features with those from pretrained video encoders. However, these encoders mix video appearance and dynamics into entangled features, limiting the benefit of such alignment. In this paper, we propose a motion-centric alignment framework that learns a disentangled motion subspace from a pretrained video encoder. This subspace is optimized to predict ground-truth optical flow, ensuring it captures true motion dynamics. We then align the latent features of a text-to-video diffusion model to this new subspace, enabling the generative model to internalize motion knowledge and generate more plausible videos. Our method improves the physical commonsense in a state-of-the-art video diffusion model, while preserving adherence to textual prompts, as evidenced by empirical evaluations on VideoPhy, VideoPhy2, VBench, and VBench-2.0, along with a user study. |

| Filipe Laitenberger, Dawid Jan Kopiczko, Cees G M Snoek, Yuki M Asano: What Layers When: Learning to Skip Compute in LLMs with Residual Gates. In: ICLR, 2026. @inproceedings{LaitenbergerICLR2026,

title = {What Layers When: Learning to Skip Compute in LLMs with Residual Gates},

author = {Filipe Laitenberger and Dawid Jan Kopiczko and Cees G M Snoek and Yuki M Asano},

url = {https://arxiv.org/abs/2510.13876},

year = {2026},

date = {2026-04-23},

urldate = {2026-04-23},

booktitle = {ICLR},

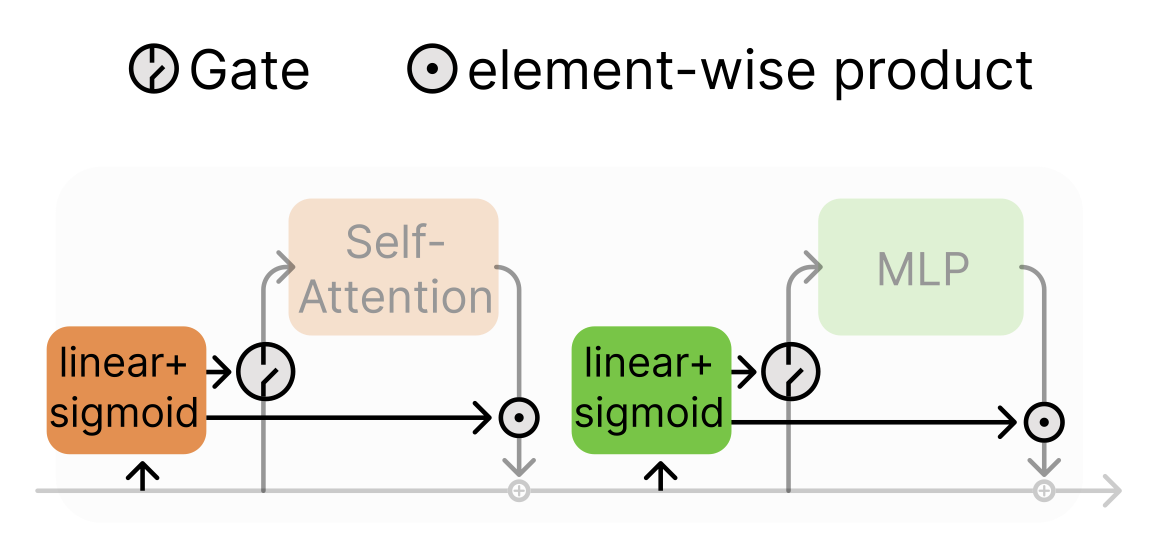

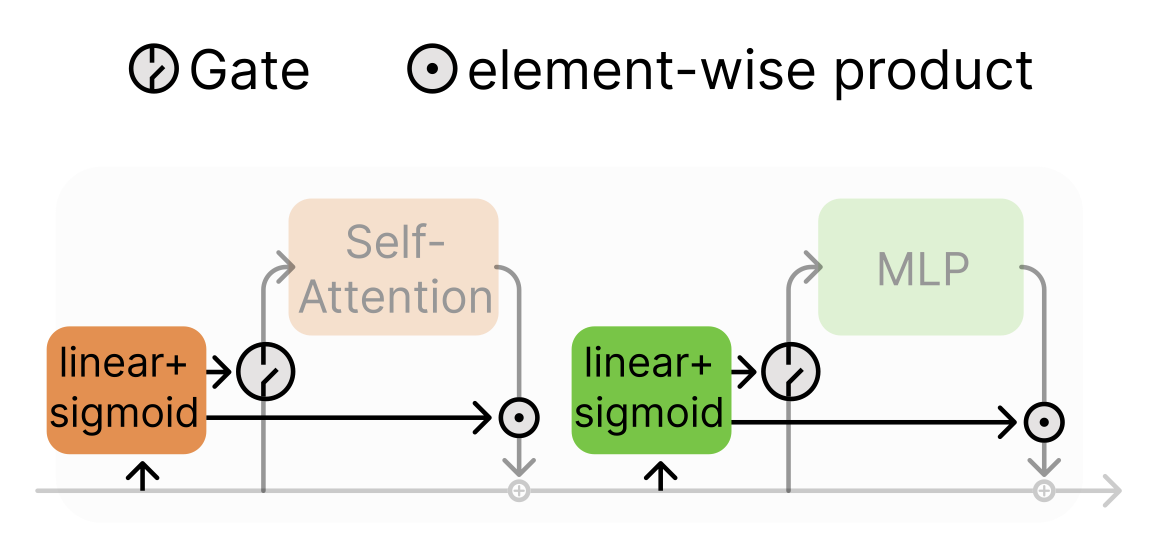

abstract = {We introduce GateSkip, a simple residual-stream gating mechanism that enables token-wise layer skipping in decoder-only LMs. Each Attention/MLP branch is equipped with a sigmoid-linear gate that condenses the branch's output before it re-enters the residual stream. During inference we rank tokens by the gate values and skip low-importance ones using a per-layer budget. While early-exit or router-based Mixture-of-Depths models are known to be unstable and need extensive retraining, our smooth, differentiable gates fine-tune stably on top of pretrained models. On long-form reasoning, we save up to 15% compute while retaining over 90% of baseline accuracy. For increasingly larger models, this tradeoff improves drastically. On instruction-tuned models we see accuracy gains at full compute and match baseline quality near 50% savings. The learned gates give insight into transformer information flow (e.g., BOS tokens act as anchors), and the method combines easily with quantization, pruning, and self-speculative decoding.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

We introduce GateSkip, a simple residual-stream gating mechanism that enables token-wise layer skipping in decoder-only LMs. Each Attention/MLP branch is equipped with a sigmoid-linear gate that condenses the branch's output before it re-enters the residual stream. During inference we rank tokens by the gate values and skip low-importance ones using a per-layer budget. While early-exit or router-based Mixture-of-Depths models are known to be unstable and need extensive retraining, our smooth, differentiable gates fine-tune stably on top of pretrained models. On long-form reasoning, we save up to 15% compute while retaining over 90% of baseline accuracy. For increasingly larger models, this tradeoff improves drastically. On instruction-tuned models we see accuracy gains at full compute and match baseline quality near 50% savings. The learned gates give insight into transformer information flow (e.g., BOS tokens act as anchors), and the method combines easily with quantization, pruning, and self-speculative decoding. |

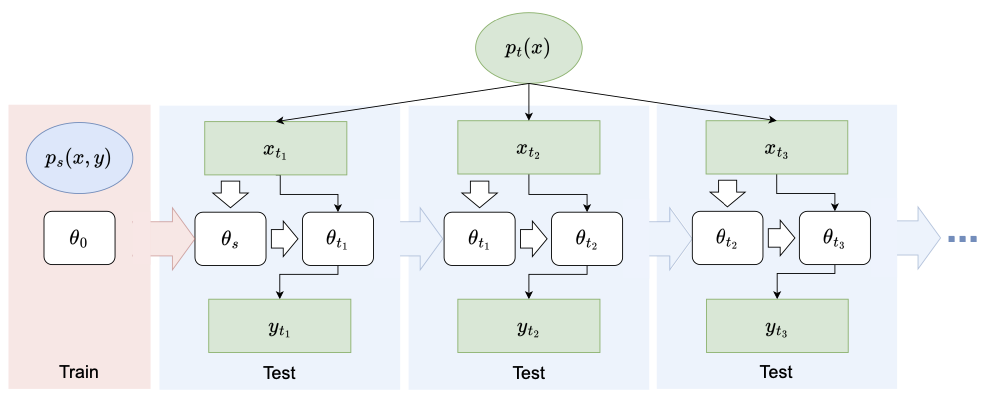

| Haohui Liang, Runlin Huang, Yingjun Du, Yujia Hu, Weifeng Su, Cees G M Snoek: Prompt-Robust Vision-Language Models via Meta-Finetuning. In: ICLR, 2026. @inproceedings{LiangICLR2026,

title = {Prompt-Robust Vision-Language Models via Meta-Finetuning},

author = {Haohui Liang and Runlin Huang and Yingjun Du and Yujia Hu and Weifeng Su and Cees G M Snoek},

year = {2026},

date = {2026-04-23},

booktitle = {ICLR},

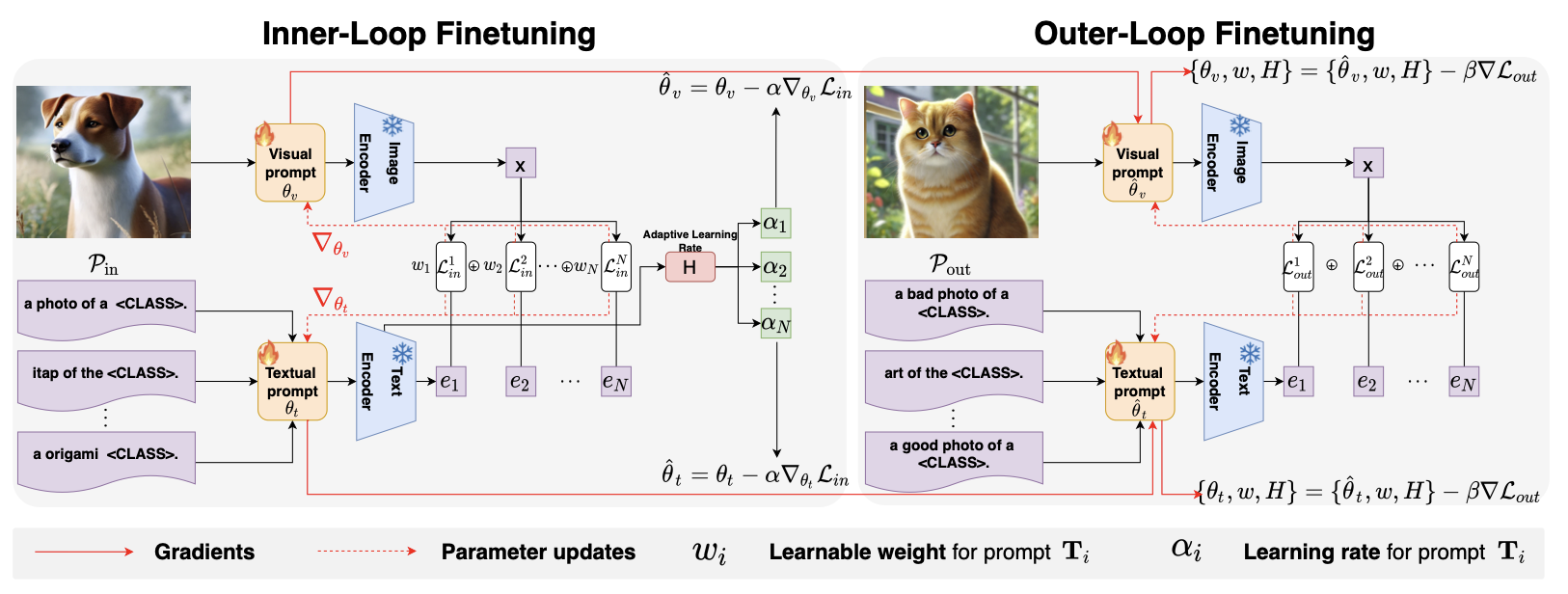

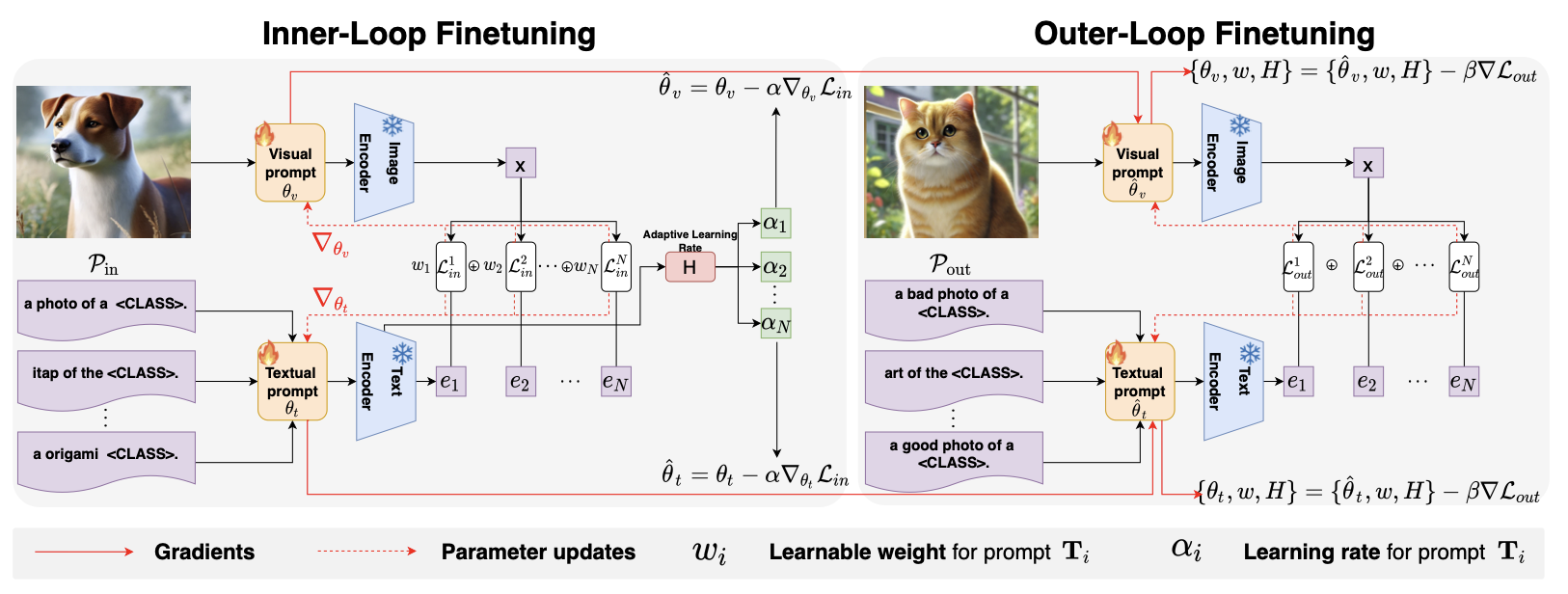

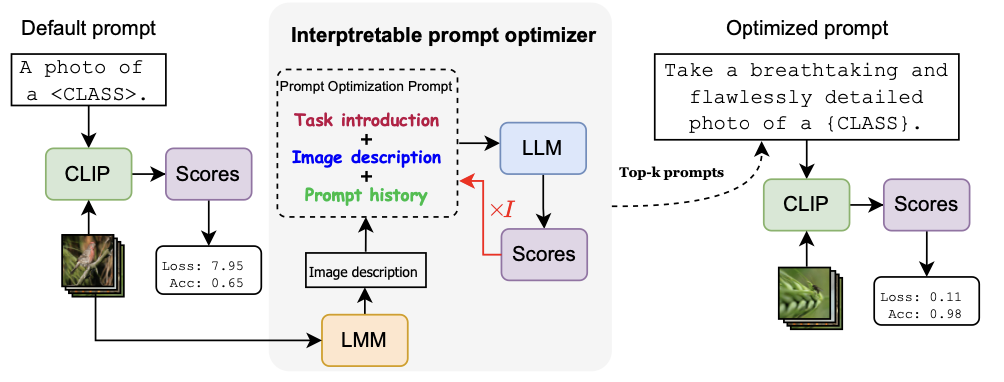

abstract = {Vision-language models (VLMs) have demonstrated remarkable generalization across diverse tasks by leveraging large-scale image-text pretraining. However, their performance is notoriously unstable under variations in natural language prompts, posing a considerable challenge for reliable real-world deployment. To address this prompt sensitivity, we propose Promise, a meta-learning framework for prompt-Robust vision-language models via meta-finetuning, which explicitly learns to generalize across diverse prompt formulations. Our method operates in a dual-loop meta-finetuning setting: the inner loop adapts token embeddings based on a set of varied prompts, while the outer loop optimizes for generalization on unseen prompt variants. To further improve robustness, we introduce an adaptive prompt weighting mechanism that dynamically emphasizes more generalizable prompts and a token-specific learning rate module that fine-tunes individual prompt tokens based on contextual importance. We further establish that Promise’s weighted and preconditioned inner update provably (i) yields a one-step decrease of the outer empirical risk together with a contraction of across-prompt sensitivity, and (ii) tightens a data-dependent generalization bound evaluated at the post-inner initialization. Across 15 benchmarks spanning base-to-novel generalization, cross-dataset transfer, and domain shift, our approach consistently reduces prompt sensitivity and improves performance stability over existing prompt learning methods.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

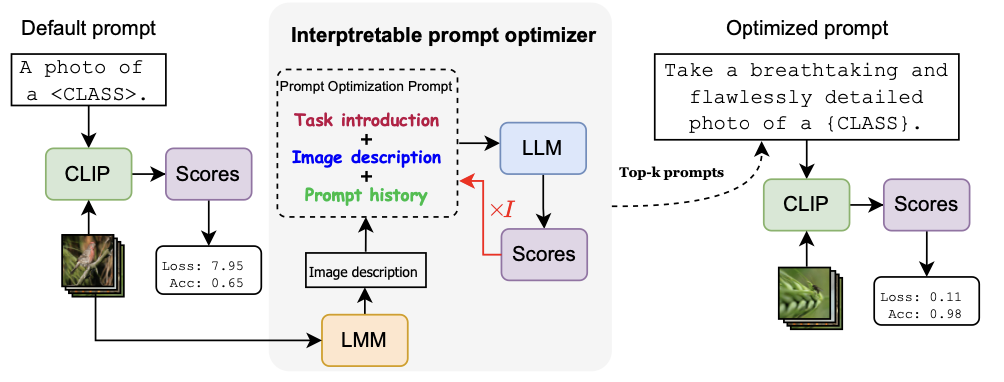

Vision-language models (VLMs) have demonstrated remarkable generalization across diverse tasks by leveraging large-scale image-text pretraining. However, their performance is notoriously unstable under variations in natural language prompts, posing a considerable challenge for reliable real-world deployment. To address this prompt sensitivity, we propose Promise, a meta-learning framework for prompt-Robust vision-language models via meta-finetuning, which explicitly learns to generalize across diverse prompt formulations. Our method operates in a dual-loop meta-finetuning setting: the inner loop adapts token embeddings based on a set of varied prompts, while the outer loop optimizes for generalization on unseen prompt variants. To further improve robustness, we introduce an adaptive prompt weighting mechanism that dynamically emphasizes more generalizable prompts and a token-specific learning rate module that fine-tunes individual prompt tokens based on contextual importance. We further establish that Promise’s weighted and preconditioned inner update provably (i) yields a one-step decrease of the outer empirical risk together with a contraction of across-prompt sensitivity, and (ii) tightens a data-dependent generalization bound evaluated at the post-inner initialization. Across 15 benchmarks spanning base-to-novel generalization, cross-dataset transfer, and domain shift, our approach consistently reduces prompt sensitivity and improves performance stability over existing prompt learning methods. |

| Matteo Nulli, Orshulevich Vladimir, Tala Bazazo, Christian Herold, Michael Kozielski, Marcin Mazur, Szymon Tuzel, Cees G M Snoek, Seyyed Hadi Hashemi, Omar Javed, Yannick Versley, Shahram Khadivi: Adapting Vision-Language Models for E-Commerce Understanding at Scale. In: EACL, 2026. @inproceedings{NulliEACL2026,

title = {Adapting Vision-Language Models for E-Commerce Understanding at Scale},

author = {Matteo Nulli and Orshulevich Vladimir and Tala Bazazo and Christian Herold and Michael Kozielski and Marcin Mazur and Szymon Tuzel and Cees G M Snoek and Seyyed Hadi Hashemi and Omar Javed and Yannick Versley and Shahram Khadivi},

year = {2026},

date = {2026-03-24},

booktitle = {EACL},

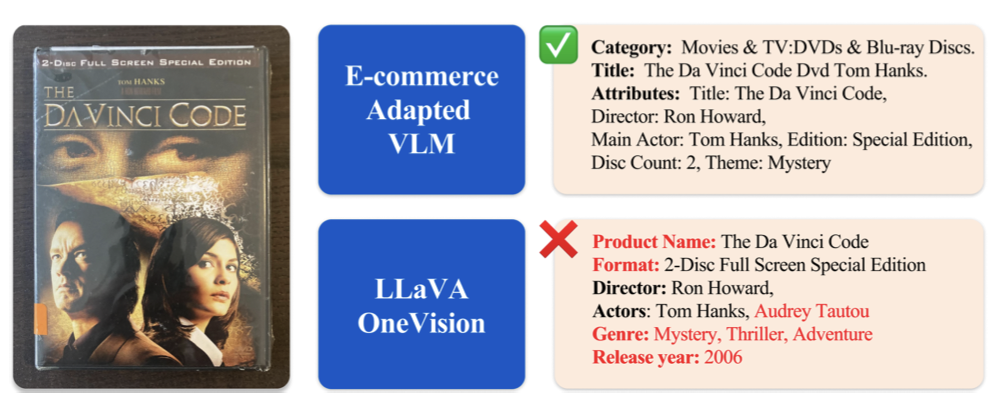

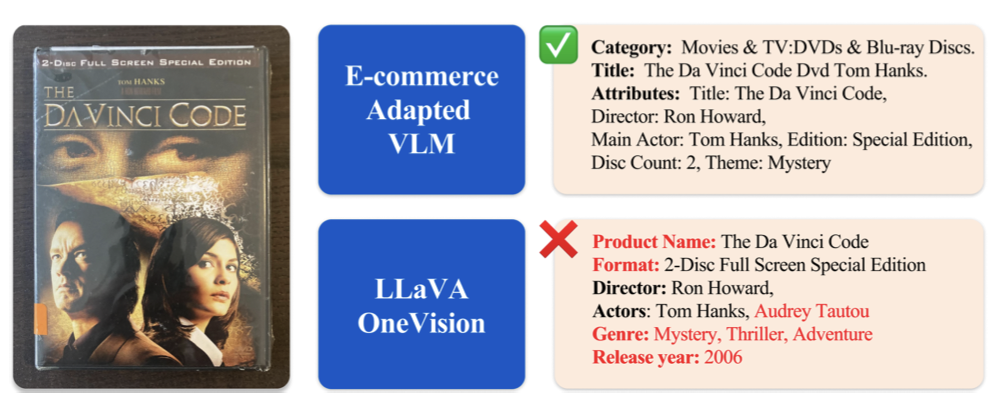

abstract = {E-commerce product understanding demands by nature, strong multimodal comprehension from text, images, and structured attributes. General-purpose Vision–Language Models (VLMs) enable generalizable multimodal latent modelling, yet there is no documented, well-known strategy for adapting them to the attribute-centric, multi-image, and noisy nature of e-commerce data, without sacrificing general performance. In this work, we show through a large-scale experimental study, how targeted adaptation of general VLMs can substantially improve e-commerce performance while preserving broad multimodal capabilities. Furthermore, we propose a novel extensive evaluation suite covering deep product understanding, strict instruction following, and dynamic attribute extraction.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

E-commerce product understanding demands by nature, strong multimodal comprehension from text, images, and structured attributes. General-purpose Vision–Language Models (VLMs) enable generalizable multimodal latent modelling, yet there is no documented, well-known strategy for adapting them to the attribute-centric, multi-image, and noisy nature of e-commerce data, without sacrificing general performance. In this work, we show through a large-scale experimental study, how targeted adaptation of general VLMs can substantially improve e-commerce performance while preserving broad multimodal capabilities. Furthermore, we propose a novel extensive evaluation suite covering deep product understanding, strict instruction following, and dynamic attribute extraction. |

| Wenfang Sun, Yingjun Du, Gaowen Liu, Cees G M Snoek: QUOTA: Quantifying Objects with Text-to-Image Models for Any Domain. In: WACV, 2026. @inproceedings{SunWACV2026,

title = {QUOTA: Quantifying Objects with Text-to-Image Models for Any Domain},

author = {Wenfang Sun and Yingjun Du and Gaowen Liu and Cees G M Snoek},

url = {https://arxiv.org/abs/2411.19534},

year = {2026},

date = {2026-03-06},

urldate = {2024-11-29},

booktitle = {WACV},

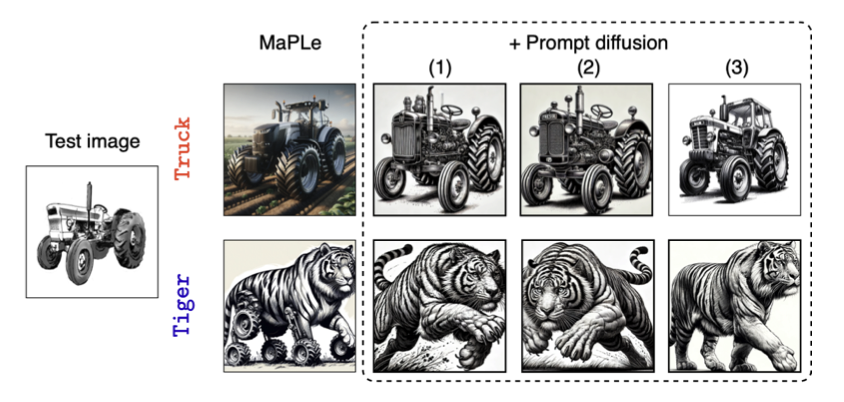

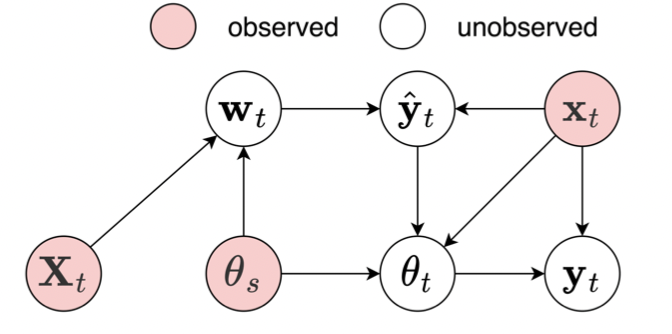

abstract = {We tackle the problem of quantifying the number of objects by a generative text-to-image model. Rather than retraining such a model for each new image domain of interest, which leads to high computational costs and limited scalability, we are the first to consider this problem from a domain-agnostic perspective. We propose QUOTA, an optimization framework for text-to-image models that enables effective object quantification across unseen domains without retraining. It leverages a dual-loop meta-learning strategy to optimize a domain-invariant prompt. Further, by integrating prompt learning with learnable counting and domain tokens, our method captures stylistic variations and maintains accuracy, even for object classes not encountered during training. For evaluation, we adopt a new benchmark specifically designed for object quantification in domain generalization, enabling rigorous assessment of object quantification accuracy and adaptability across unseen domains in text-to-image generation. Extensive experiments demonstrate that QUOTA outperforms conventional models in both object quantification accuracy and semantic consistency, setting a new benchmark for efficient and scalable text-to-image generation for any domain.},

howpublished = {arXiv:2411.19534},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

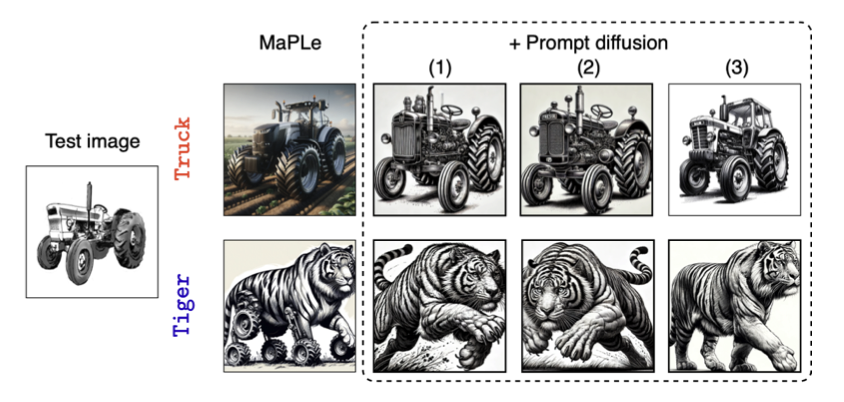

We tackle the problem of quantifying the number of objects by a generative text-to-image model. Rather than retraining such a model for each new image domain of interest, which leads to high computational costs and limited scalability, we are the first to consider this problem from a domain-agnostic perspective. We propose QUOTA, an optimization framework for text-to-image models that enables effective object quantification across unseen domains without retraining. It leverages a dual-loop meta-learning strategy to optimize a domain-invariant prompt. Further, by integrating prompt learning with learnable counting and domain tokens, our method captures stylistic variations and maintains accuracy, even for object classes not encountered during training. For evaluation, we adopt a new benchmark specifically designed for object quantification in domain generalization, enabling rigorous assessment of object quantification accuracy and adaptability across unseen domains in text-to-image generation. Extensive experiments demonstrate that QUOTA outperforms conventional models in both object quantification accuracy and semantic consistency, setting a new benchmark for efficient and scalable text-to-image generation for any domain. |

| Jie Ou, Shuaihong Jiang, Yingjun Du, Cees G M Snoek: GateRA: Token-Aware Modulation for Parameter-Efficient Fine-Tuning. In: AAAI, 2026. @inproceedings{OuAAAI2026,

title = {GateRA: Token-Aware Modulation for Parameter-Efficient Fine-Tuning},

author = {Jie Ou and Shuaihong Jiang and Yingjun Du and Cees G M Snoek},

url = {https://arxiv.org/abs/2511.17582},

year = {2026},

date = {2026-01-20},

urldate = {2026-01-20},

booktitle = {AAAI},

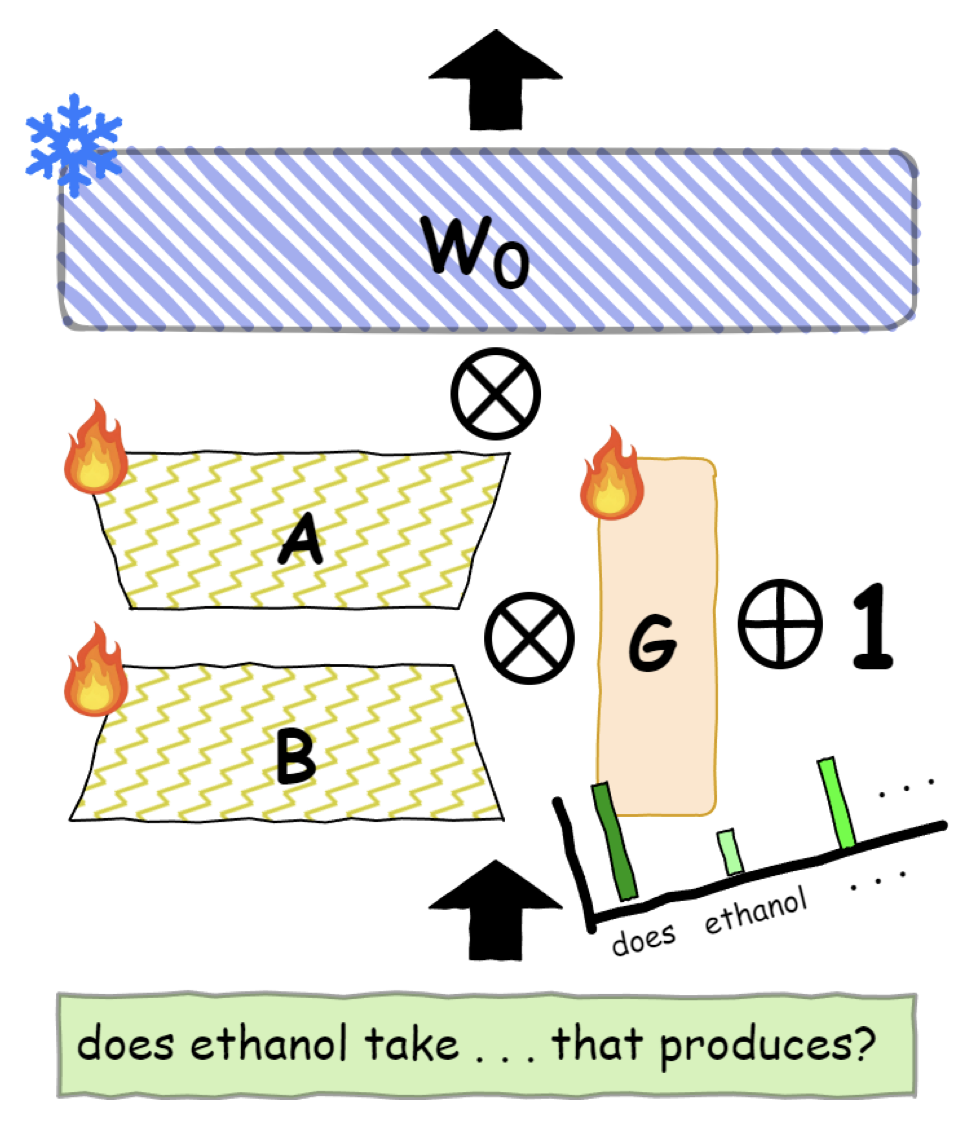

abstract = {Parameter-efficient fine-tuning (PEFT) methods, such as LoRA, DoRA, and HiRA, enable lightweight adaptation of large pre-trained models via low-rank updates. However, existing PEFT approaches apply static, input-agnostic updates to all tokens, disregarding the varying importance and difficulty of different inputs. This uniform treatment can lead to overfitting on trivial content or under-adaptation on more informative regions, especially in autoregressive settings with distinct prefill and decoding dynamics. In this paper, we propose GateRA, a unified framework that introduces token-aware modulation to dynamically adjust the strength of PEFT updates. By incorporating adaptive gating into standard PEFT branches, GateRA enables selective, token-level adaptation, preserving pre-trained knowledge for well-modeled inputs while focusing capacity on challenging cases. Empirical visualizations reveal phase-sensitive behaviors, where GateRA automatically suppresses updates for redundant prefill tokens while emphasizing adaptation during decoding. To promote confident and efficient modulation, we further introduce an entropy-based regularization that encourages near-binary gating decisions. This regularization prevents diffuse update patterns and leads to interpretable, sparse adaptation without hard thresholding. Finally, we present a theoretical analysis showing that GateRA induces a soft gradient-masking effect over the PEFT path, enabling continuous and differentiable control over adaptation. Experiments on multiple commonsense reasoning benchmarks demonstrate that GateRA consistently outperforms or matches prior PEFT methods.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Parameter-efficient fine-tuning (PEFT) methods, such as LoRA, DoRA, and HiRA, enable lightweight adaptation of large pre-trained models via low-rank updates. However, existing PEFT approaches apply static, input-agnostic updates to all tokens, disregarding the varying importance and difficulty of different inputs. This uniform treatment can lead to overfitting on trivial content or under-adaptation on more informative regions, especially in autoregressive settings with distinct prefill and decoding dynamics. In this paper, we propose GateRA, a unified framework that introduces token-aware modulation to dynamically adjust the strength of PEFT updates. By incorporating adaptive gating into standard PEFT branches, GateRA enables selective, token-level adaptation, preserving pre-trained knowledge for well-modeled inputs while focusing capacity on challenging cases. Empirical visualizations reveal phase-sensitive behaviors, where GateRA automatically suppresses updates for redundant prefill tokens while emphasizing adaptation during decoding. To promote confident and efficient modulation, we further introduce an entropy-based regularization that encourages near-binary gating decisions. This regularization prevents diffuse update patterns and leads to interpretable, sparse adaptation without hard thresholding. Finally, we present a theoretical analysis showing that GateRA induces a soft gradient-masking effect over the PEFT path, enabling continuous and differentiable control over adaptation. Experiments on multiple commonsense reasoning benchmarks demonstrate that GateRA consistently outperforms or matches prior PEFT methods. |

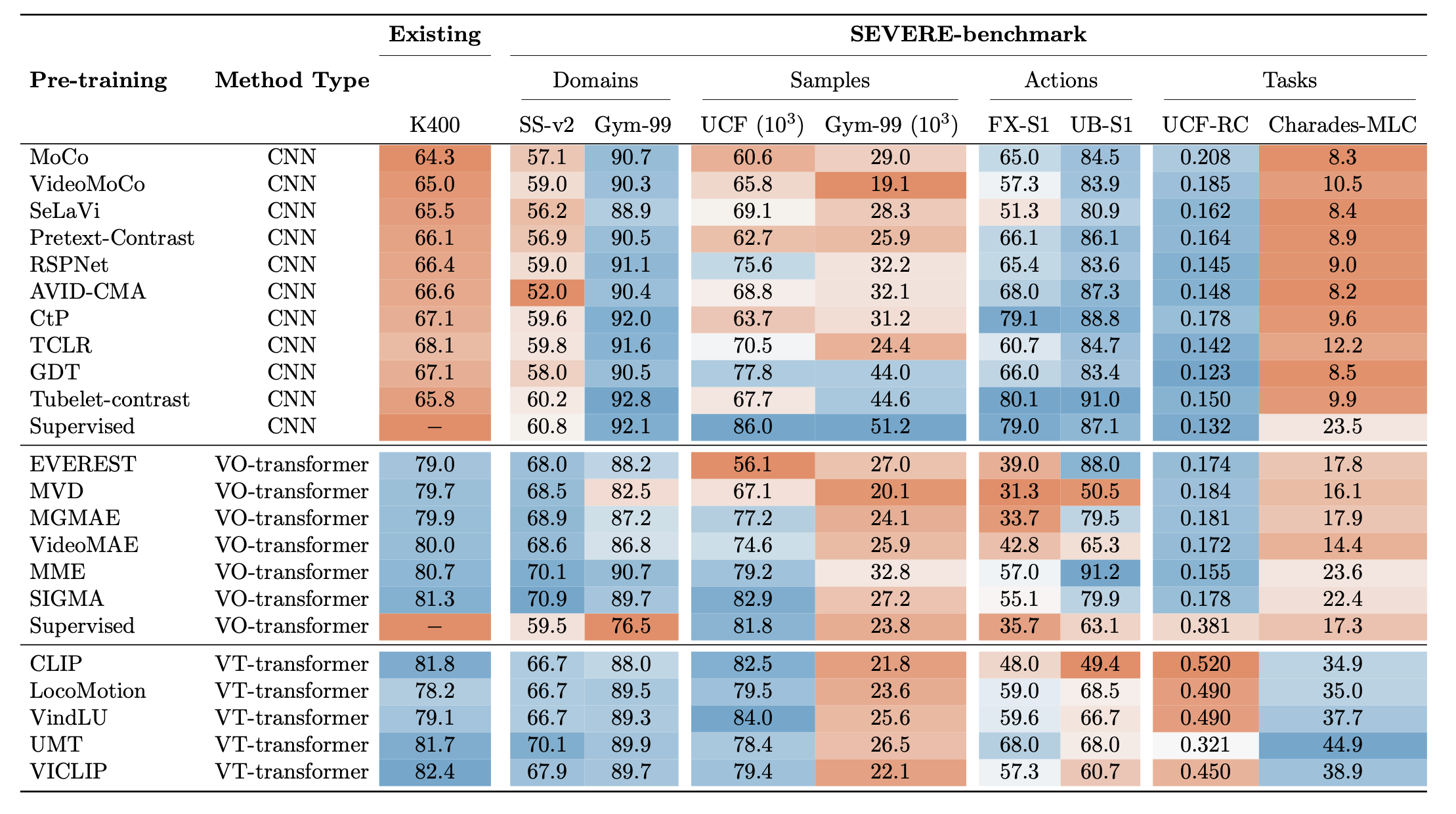

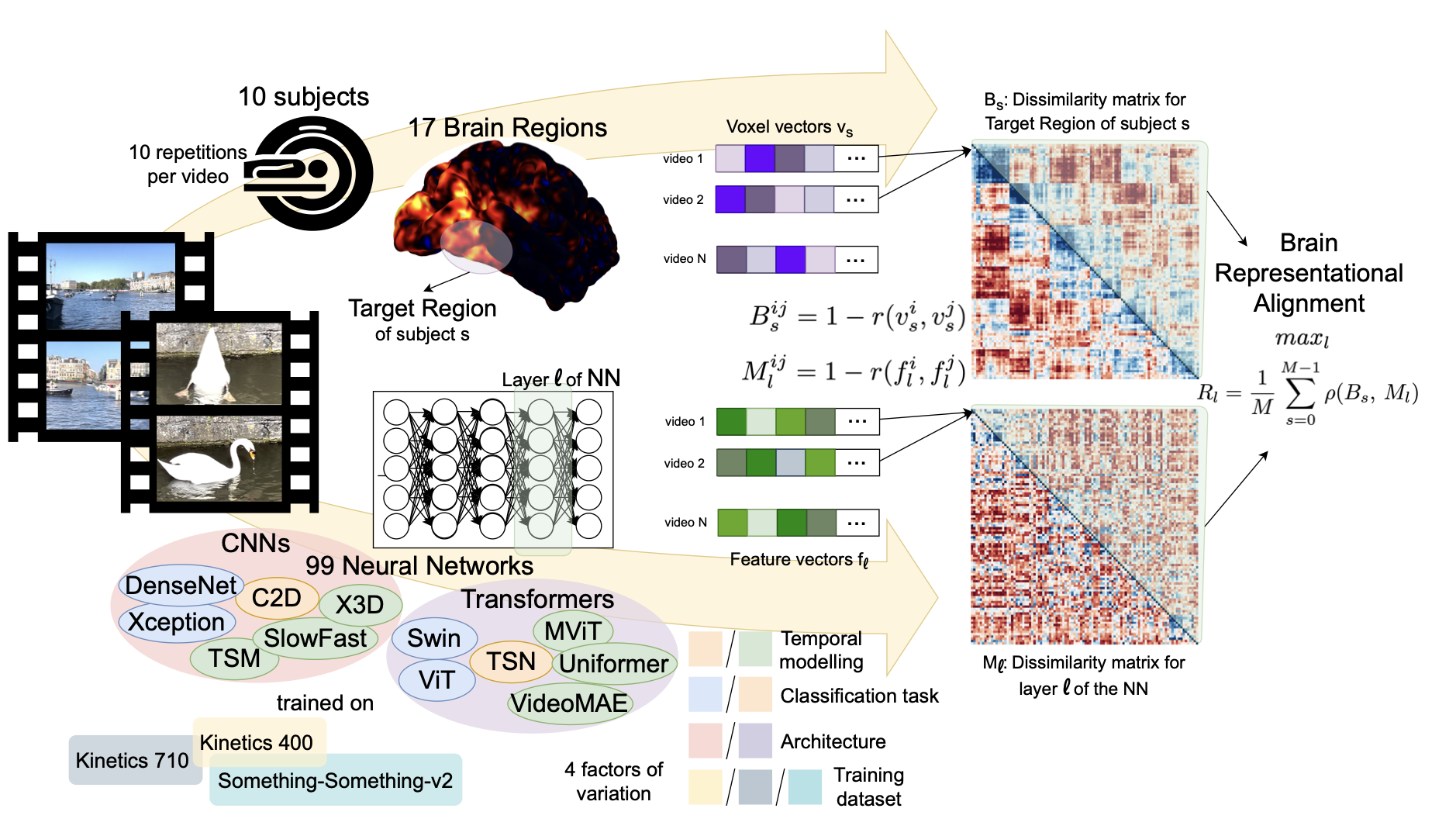

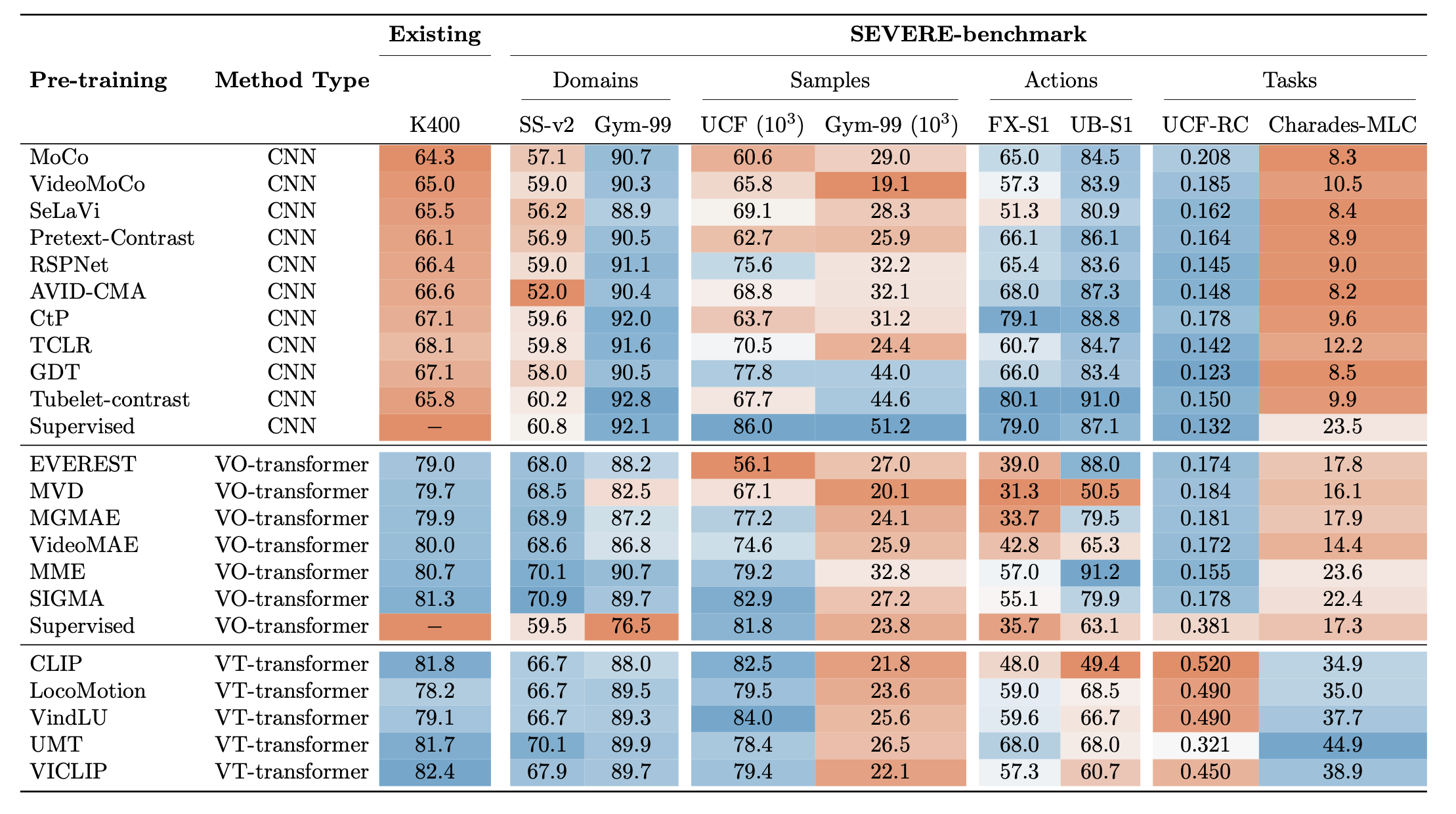

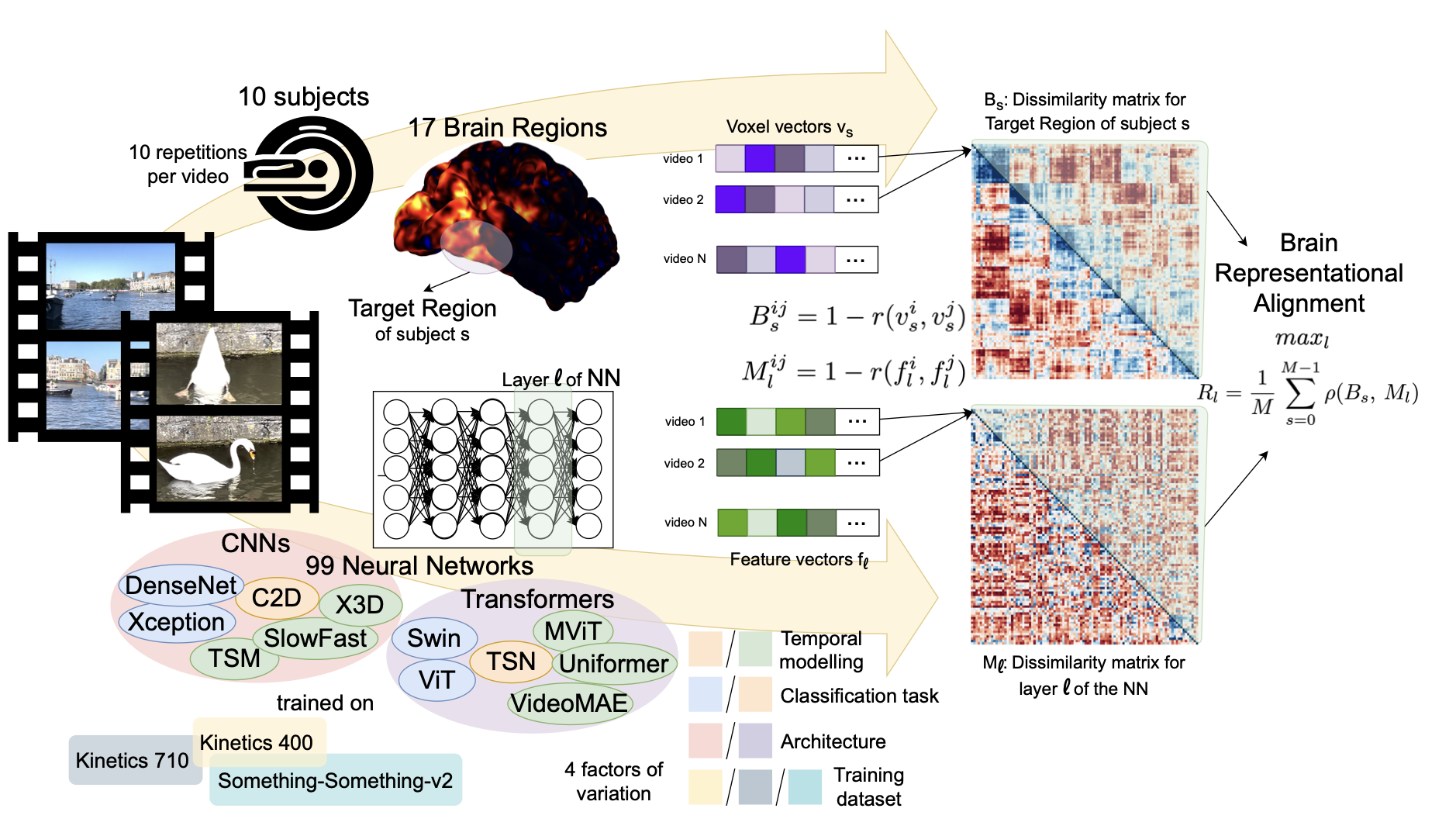

| Fida Mohammad Thoker, Letian Jiang, Chen Zhao, Piyush Bagad, Hazel Doughty, Bernard Ghanem, Cees G M Snoek: SEVERE++: Evaluating Benchmark Sensitivity in Generalization of Video Representation Learning. In: International Journal of Computer Vision, 2026, (Submitted.). @article{ThokerIJCV2025,

title = {SEVERE++: Evaluating Benchmark Sensitivity in Generalization of Video Representation Learning},

author = {Fida Mohammad Thoker and Letian Jiang and Chen Zhao and Piyush Bagad and Hazel Doughty and Bernard Ghanem and Cees G M Snoek},

url = {https://arxiv.org/abs/2504.05706},

year = {2026},

date = {2026-01-01},

urldate = {2025-04-08},

journal = {International Journal of Computer Vision},

abstract = {Continued advances in self-supervised learning have led to significant progress in video representation learning, offering a scalable alternative to supervised approaches by removing the need for manual annotations. Despite strong performance on standard action recognition benchmarks, video self-supervised learning methods are largely evaluated under narrow protocols, typically pretraining on Kinetics-400 and fine-tuning on similar datasets, limiting our understanding of their generalization in real world scenarios. In this work, we present a comprehensive evaluation of modern video self-supervised models, focusing on generalization across four key downstream factors: domain shift, sample efficiency, action granularity, and task diversity. Building on our prior work analyzing benchmark sensitivity in CNN-based contrastive learning, we extend the study to cover state-of-the-art transformer-based video-only and video-text models. Specifically, we benchmark 12 transformer-based methods (7 video-only, 5 video-text) and compare them to 10 CNN-based methods, totaling over 1100 experiments across 8 datasets and 7 downstream tasks. Our analysis shows that, despite architectural advances, transformer-based models remain sensitive to downstream conditions. No method generalizes consistently across all factors, video-only transformers perform better under domain shifts, CNNs outperform for fine-grained tasks, and video-text models often underperform despite large scale pretraining. We also find that recent transformer models do not consistently outperform earlier approaches. Our findings provide a detailed view of the strengths and limitations of current video SSL methods and offer a unified benchmark for evaluating generalization in video representation learning.},

note = {Submitted.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Continued advances in self-supervised learning have led to significant progress in video representation learning, offering a scalable alternative to supervised approaches by removing the need for manual annotations. Despite strong performance on standard action recognition benchmarks, video self-supervised learning methods are largely evaluated under narrow protocols, typically pretraining on Kinetics-400 and fine-tuning on similar datasets, limiting our understanding of their generalization in real world scenarios. In this work, we present a comprehensive evaluation of modern video self-supervised models, focusing on generalization across four key downstream factors: domain shift, sample efficiency, action granularity, and task diversity. Building on our prior work analyzing benchmark sensitivity in CNN-based contrastive learning, we extend the study to cover state-of-the-art transformer-based video-only and video-text models. Specifically, we benchmark 12 transformer-based methods (7 video-only, 5 video-text) and compare them to 10 CNN-based methods, totaling over 1100 experiments across 8 datasets and 7 downstream tasks. Our analysis shows that, despite architectural advances, transformer-based models remain sensitive to downstream conditions. No method generalizes consistently across all factors, video-only transformers perform better under domain shifts, CNNs outperform for fine-grained tasks, and video-text models often underperform despite large scale pretraining. We also find that recent transformer models do not consistently outperform earlier approaches. Our findings provide a detailed view of the strengths and limitations of current video SSL methods and offer a unified benchmark for evaluating generalization in video representation learning. |

| Piyush Bagad, Makarand Tapaswi, Cees G M Snoek, Andrew Zisserman: The Sound of Water: Inferring Physical Properties from Pouring Liquids. In: IEEE Transactions on Pattern Analysis and Machine Intelligence, 2026, (Pending minor revision). @article{BagadTPAMI2026,

title = {The Sound of Water: Inferring Physical Properties from Pouring Liquids},

author = {Piyush Bagad and Makarand Tapaswi and Cees G M Snoek and Andrew Zisserman},

url = {https://arxiv.org/abs/2411.11222},

year = {2026},

date = {2026-01-01},

urldate = {2025-10-23},

journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},

note = {Pending minor revision},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

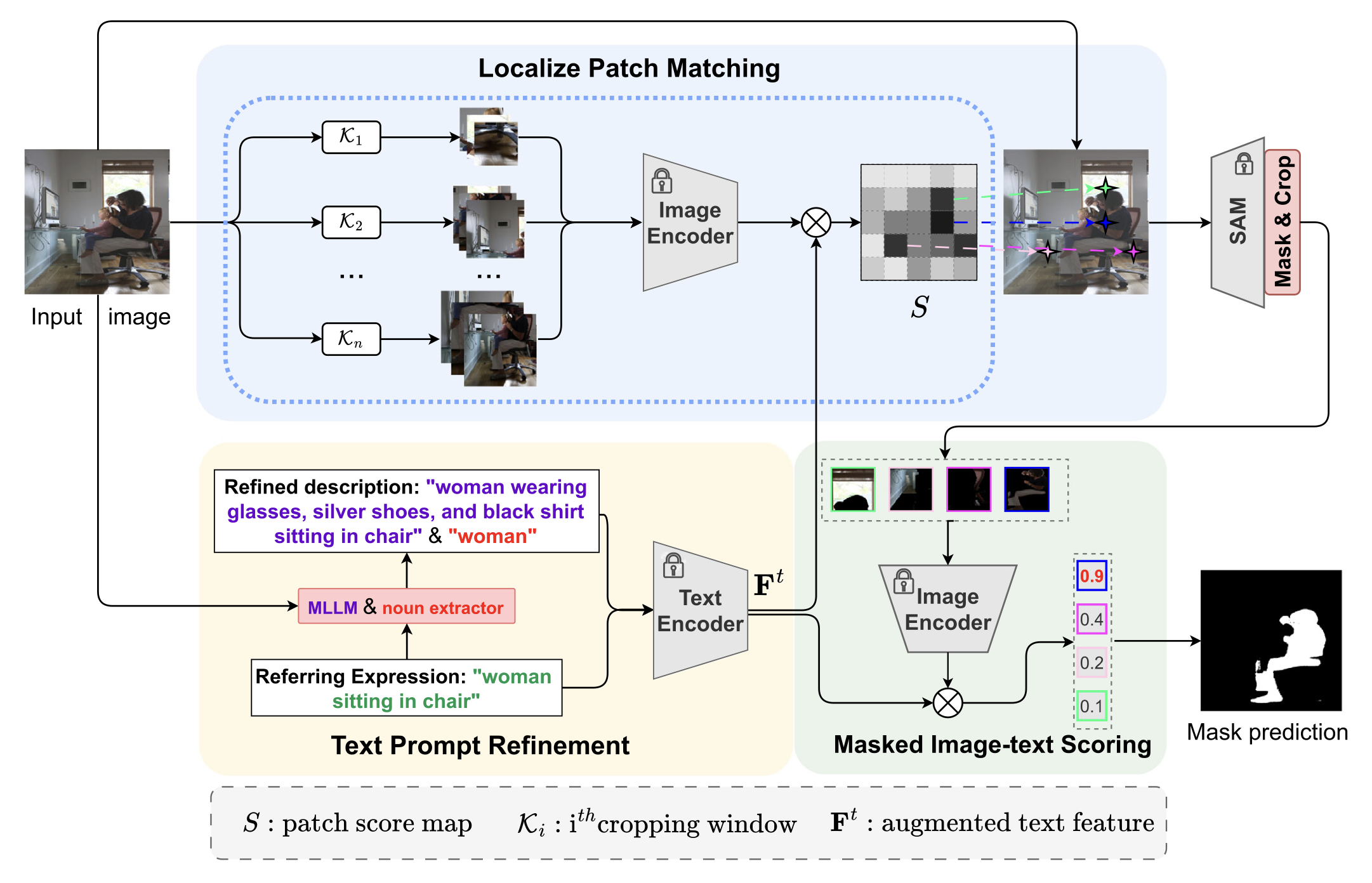

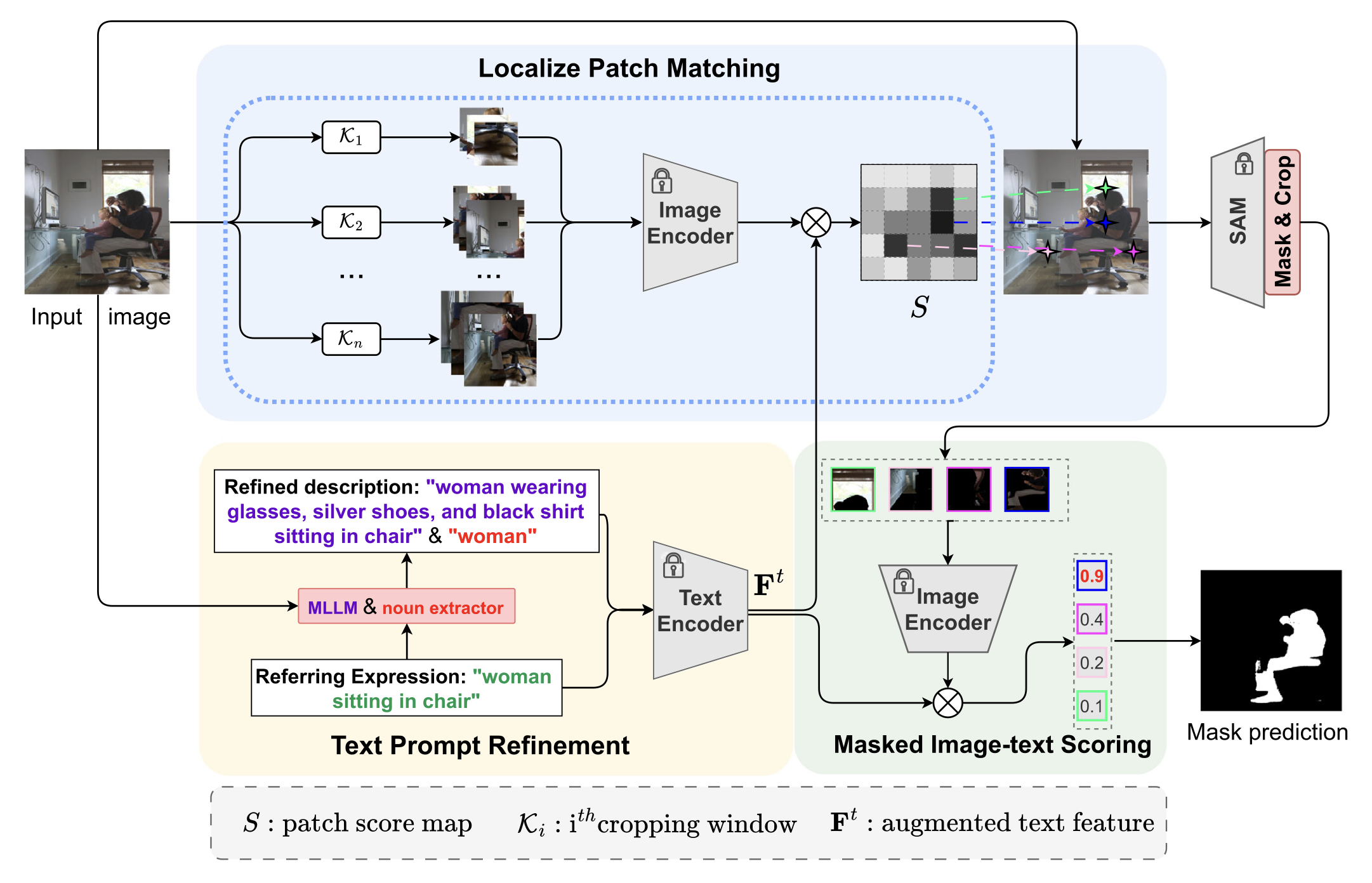

| Lei Zhang, Yongqiu Huang, Yingjun Du, Fang Lei, Zhiying Yang, Cees G M Snoek, Yehui Wang: LoTeR: Localized text prompt refinement for zero-shot referring image segmentation. In: Computer Vision and Image Understanding, vol. 263, iss. January, no. 104596, 2026. @article{ZhangCVIU2026,

title = {LoTeR: Localized text prompt refinement for zero-shot referring image segmentation},

author = {Lei Zhang and Yongqiu Huang and Yingjun Du, Fang Lei and Zhiying Yang and Cees G M Snoek and Yehui Wang},

url = {https://www.sciencedirect.com/science/article/pii/S1077314225003194},

doi = {https://doi.org/10.1016/j.cviu.2025.104596},

year = {2026},

date = {2026-01-01},

journal = {Computer Vision and Image Understanding},

volume = {263},

number = {104596},

issue = {January},

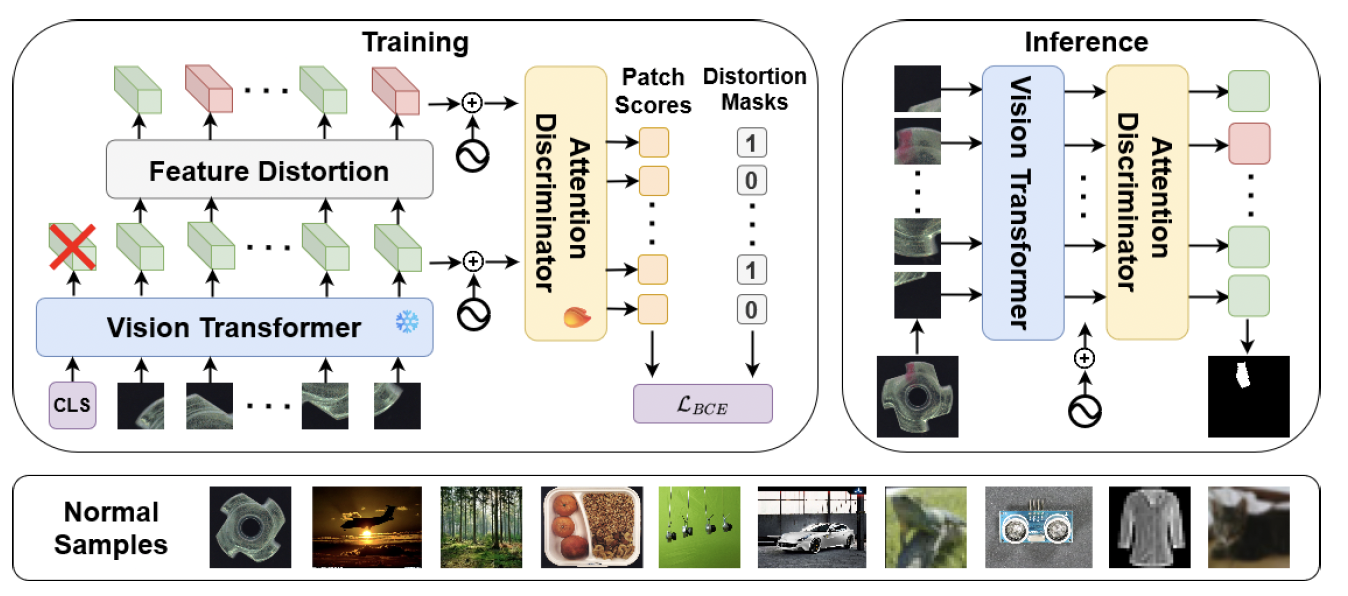

abstract = {This paper addresses the challenge of segmenting an object in an image based solely on a textual description, without requiring any training on specific object classes. In contrast to traditional methods that rely on generating numerous mask proposals, we introduce a novel patch-based approach. Our method computes the similarity between small image patches, extracted using a sliding window, and textual descriptions, producing a patch score map that identifies the regions most likely to contain the target object. This score map guides a segment-anything model to generate precise mask proposals. To further improve segmentation accuracy, we refine the textual prompts by generating detailed object descriptions using a multi-modal large language model. Our method’s effectiveness is validated through extensive experiments on the RefCOCO, RefCOCO+, and RefCOCOg datasets, where it outperforms state-of-the-art zero-shot referring image segmentation methods. Ablation studies confirm the key contributions of our patch-based segmentation and localized text prompt refinement, demonstrating their significant role in enhancing both precision and robustness.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

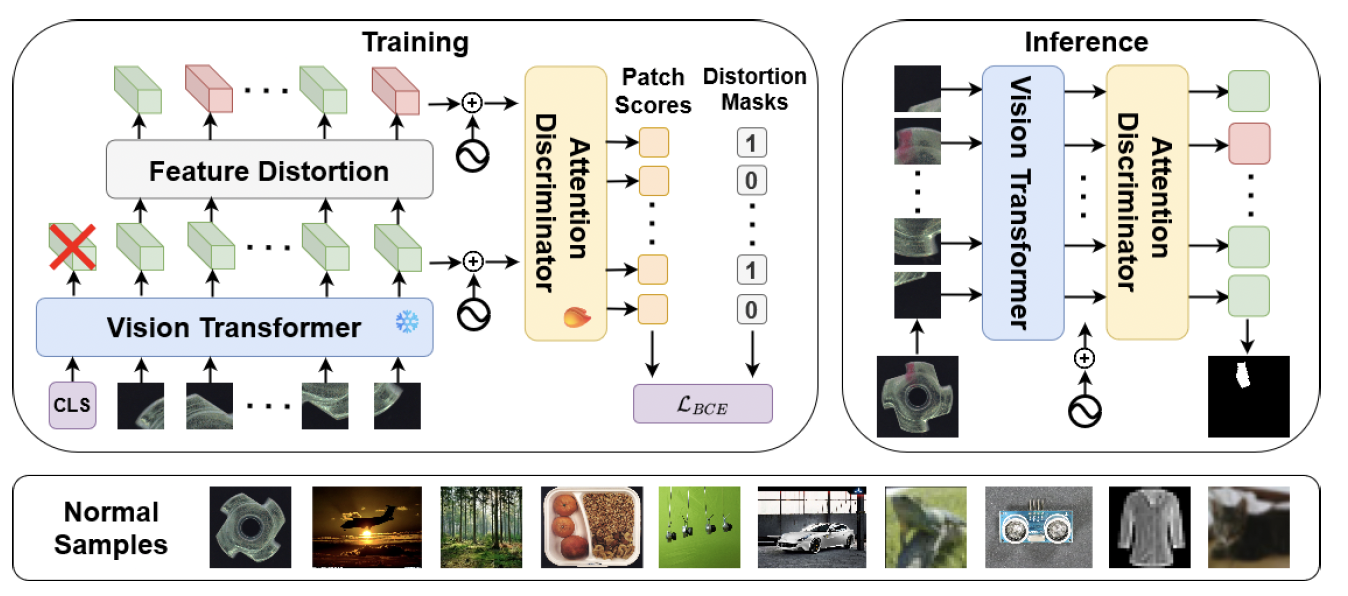

This paper addresses the challenge of segmenting an object in an image based solely on a textual description, without requiring any training on specific object classes. In contrast to traditional methods that rely on generating numerous mask proposals, we introduce a novel patch-based approach. Our method computes the similarity between small image patches, extracted using a sliding window, and textual descriptions, producing a patch score map that identifies the regions most likely to contain the target object. This score map guides a segment-anything model to generate precise mask proposals. To further improve segmentation accuracy, we refine the textual prompts by generating detailed object descriptions using a multi-modal large language model. Our method’s effectiveness is validated through extensive experiments on the RefCOCO, RefCOCO+, and RefCOCOg datasets, where it outperforms state-of-the-art zero-shot referring image segmentation methods. Ablation studies confirm the key contributions of our patch-based segmentation and localized text prompt refinement, demonstrating their significant role in enhancing both precision and robustness. |

2025

|

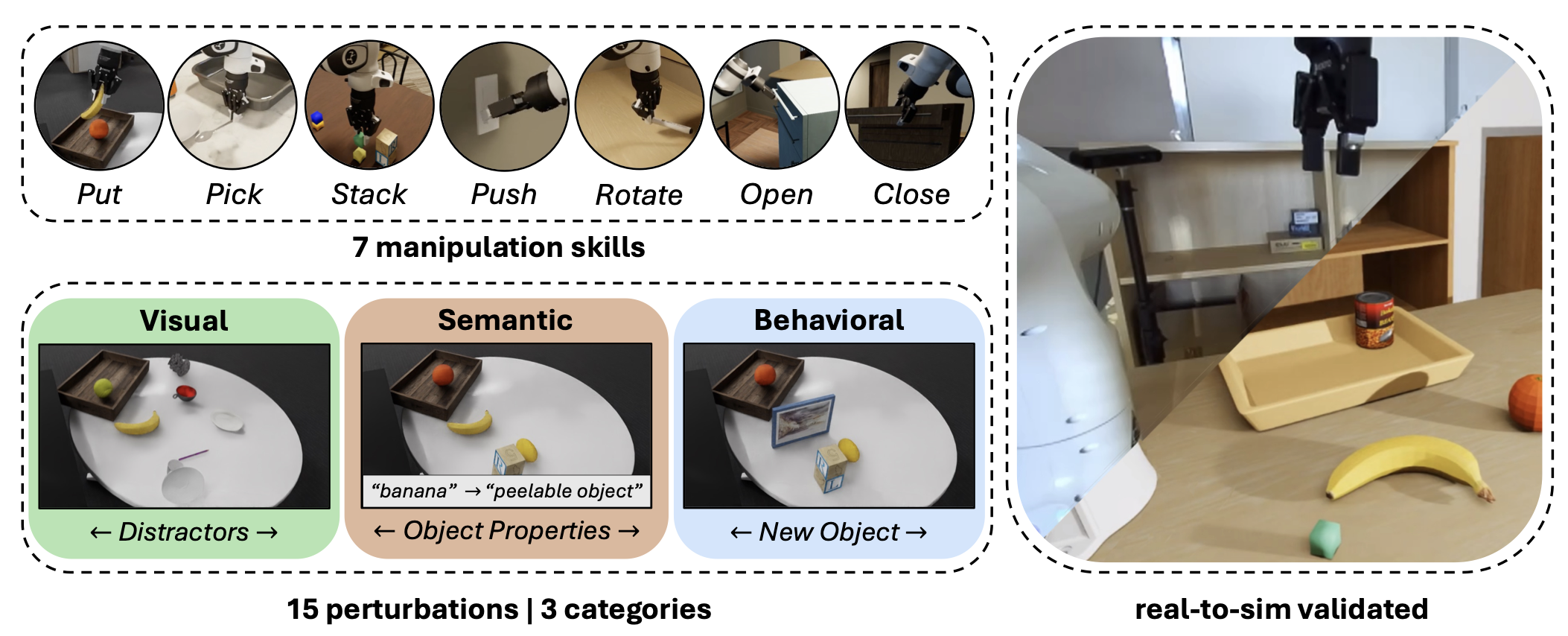

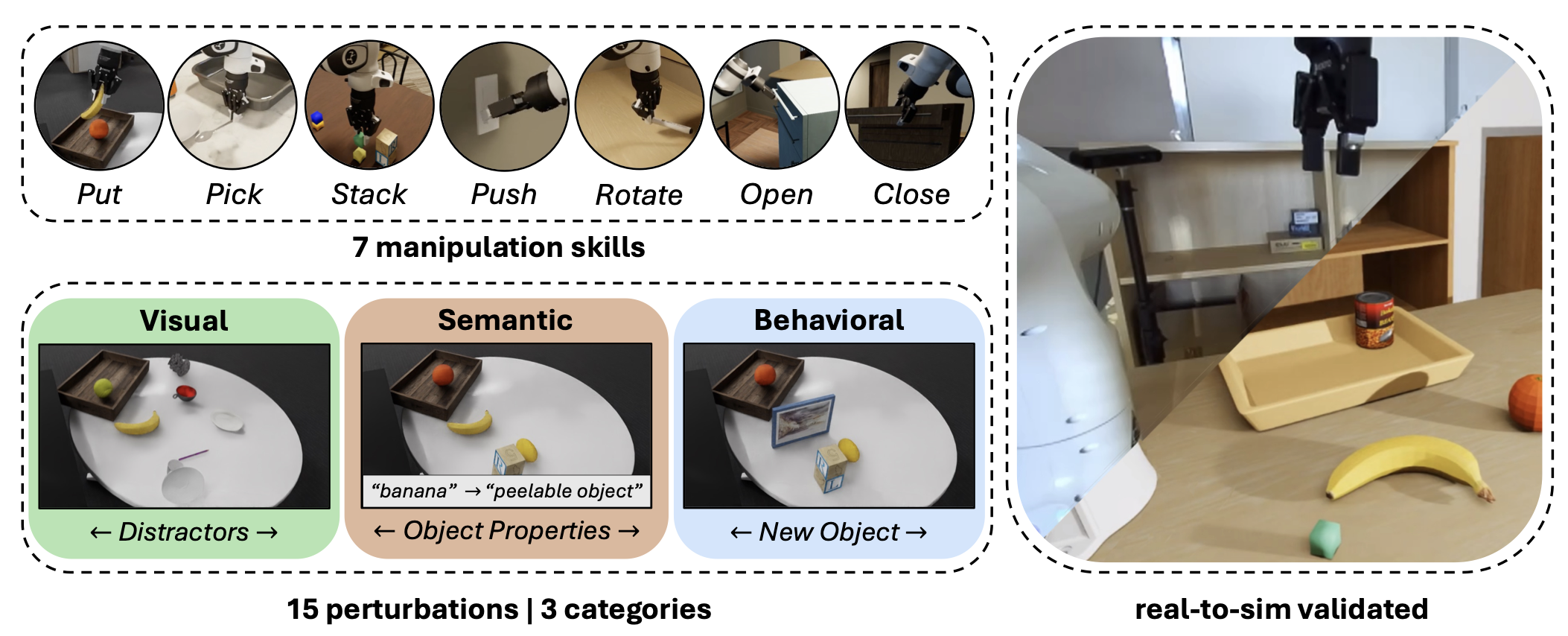

| Martin Sedlacek, Pavlo Yefanov, Georgy Ponimatkin, Jai Bardhan, Simon Pilc, Mederic Fourmy, Evangelos Kazakos, Cees G M Snoek, Josef Sivic, Vladimir Petrik: REALM: A Real-to-Sim Validated Benchmark for Generalization in Robotic Manipulation. arXiv:2512.19562, 2025. @unpublished{SedlacekArxiv2025,

title = {REALM: A Real-to-Sim Validated Benchmark for Generalization in Robotic Manipulation},

author = {Martin Sedlacek and Pavlo Yefanov and Georgy Ponimatkin and Jai Bardhan and Simon Pilc and Mederic Fourmy and Evangelos Kazakos and Cees G M Snoek and Josef Sivic and Vladimir Petrik},

url = {https://arxiv.org/abs/2512.19562

https://martin-sedlacek.com/realm/

https://github.com/martin-sedlacek/REALM},

year = {2025},

date = {2025-12-22},

urldate = {2025-12-22},

abstract = {Vision-Language-Action (VLA) models empower robots to understand and execute tasks described by natural language instructions. However, a key challenge lies in their ability to generalize beyond the specific environments and conditions they were trained on, which is presently difficult and expensive to evaluate in the real-world. To address this gap, we present REALM, a new simulation environment and benchmark designed to evaluate the generalization capabilities of VLA models, with a specific emphasis on establishing a strong correlation between simulated and real-world performance through high-fidelity visuals and aligned robot control. Our environment offers a suite of 15 perturbation factors, 7 manipulation skills, and more than 3,500 objects. Finally, we establish two task sets that form our benchmark and evaluate the pi_{0}, pi_{0}-FAST, and GR00T N1.5 VLA models, showing that generalization and robustness remain an open challenge. More broadly, we also show that simulation gives us a valuable proxy for the real-world and allows us to systematically probe for and quantify the weaknesses and failure modes of VLAs.},

howpublished = {arXiv:2512.19562},

keywords = {},

pubstate = {published},

tppubtype = {unpublished}

}

Vision-Language-Action (VLA) models empower robots to understand and execute tasks described by natural language instructions. However, a key challenge lies in their ability to generalize beyond the specific environments and conditions they were trained on, which is presently difficult and expensive to evaluate in the real-world. To address this gap, we present REALM, a new simulation environment and benchmark designed to evaluate the generalization capabilities of VLA models, with a specific emphasis on establishing a strong correlation between simulated and real-world performance through high-fidelity visuals and aligned robot control. Our environment offers a suite of 15 perturbation factors, 7 manipulation skills, and more than 3,500 objects. Finally, we establish two task sets that form our benchmark and evaluate the pi_{0}, pi_{0}-FAST, and GR00T N1.5 VLA models, showing that generalization and robustness remain an open challenge. More broadly, we also show that simulation gives us a valuable proxy for the real-world and allows us to systematically probe for and quantify the weaknesses and failure modes of VLAs. |

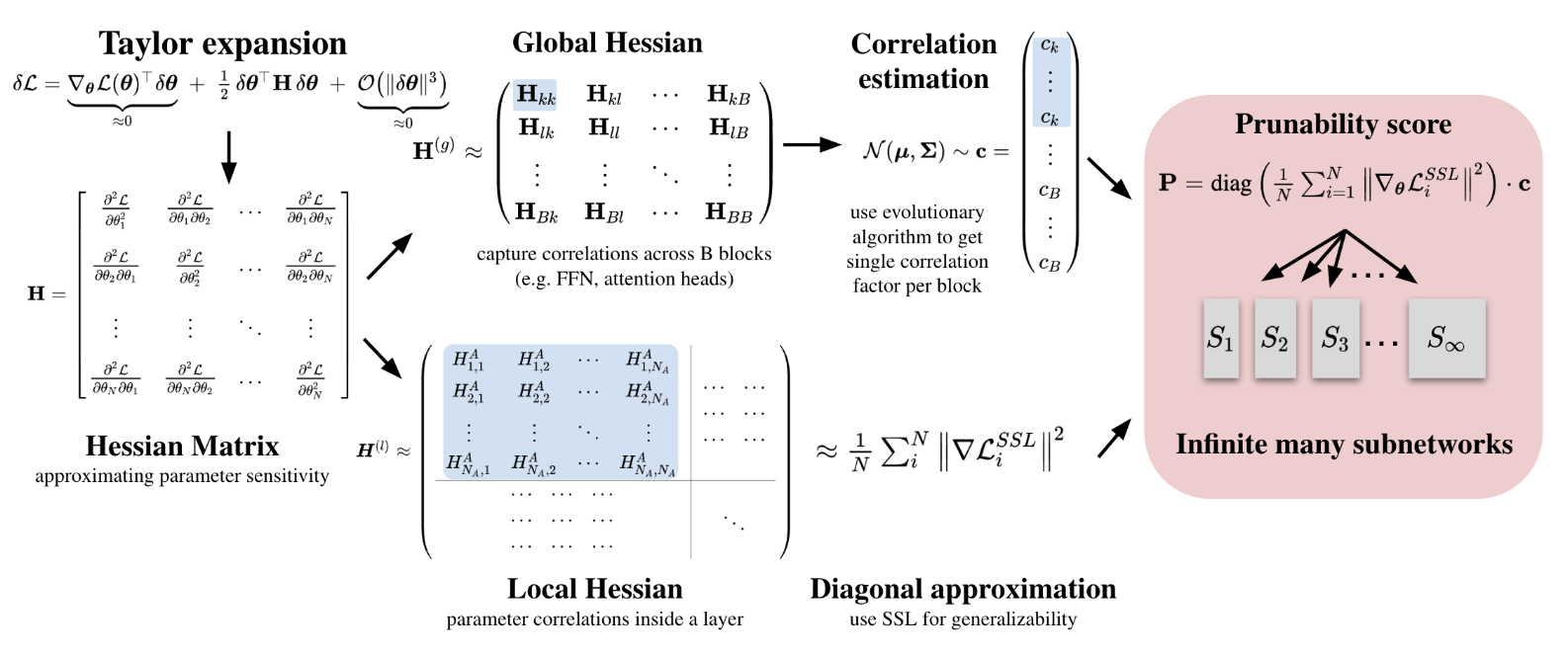

| Walter Simoncini, Michael Dorkenwald, Tijmen Blankevoort, Cees G M Snoek, Yuki M Asano: Elastic ViTs from Pretrained Models without Retraining. In: NeurIPS, 2025. @inproceedings{SimonciniNeurips25,

title = {Elastic ViTs from Pretrained Models without Retraining},

author = {Walter Simoncini and Michael Dorkenwald and Tijmen Blankevoort and Cees G M Snoek and Yuki M Asano},

url = {https://arxiv.org/abs/2510.17700

https://elastic.ashita.nl},

year = {2025},

date = {2025-12-02},

urldate = {2025-12-02},

booktitle = {NeurIPS},

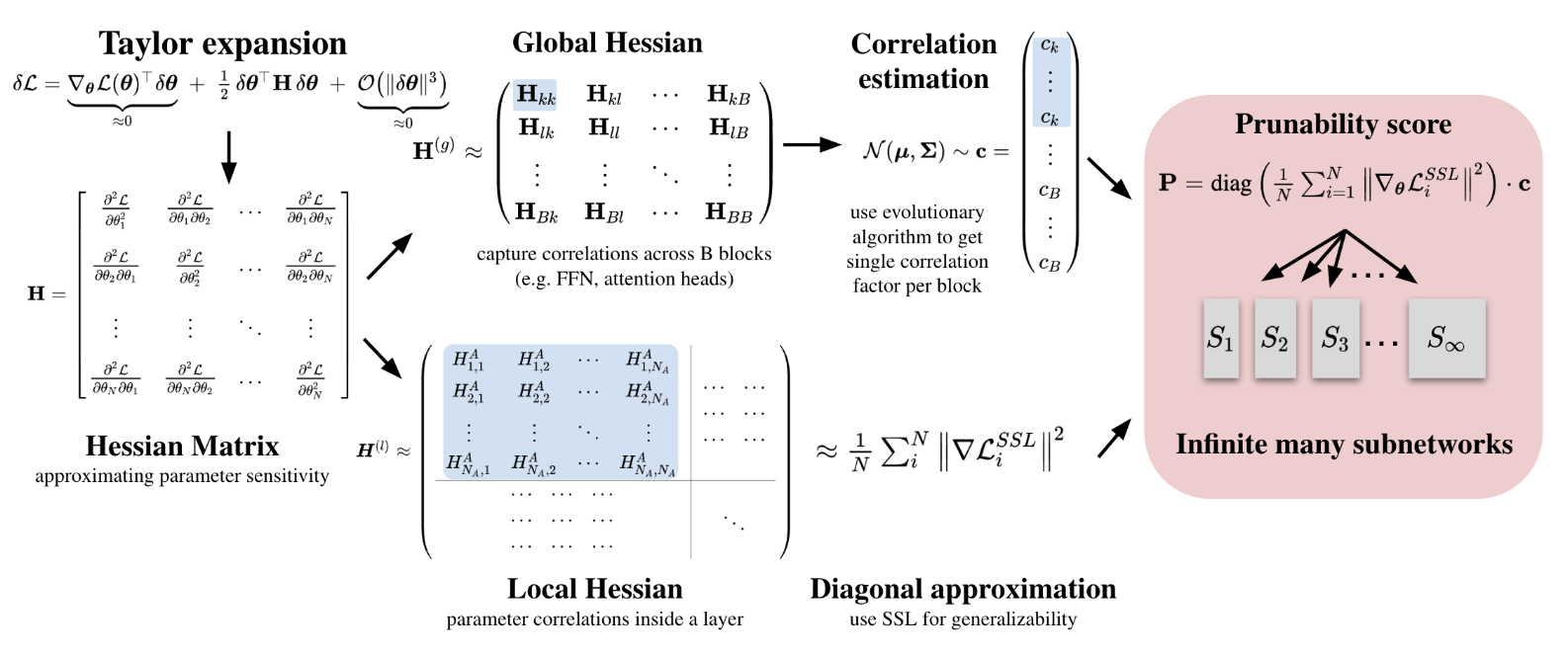

abstract = {Vision foundation models achieve remarkable performance but are only available in a limited set of pre-determined sizes, forcing sub-optimal deployment choices under real-world constraints. We introduce SnapViT: Single-shot network approximation for pruned Vision Transformers, a new post-pretraining structured pruning method that enables elastic inference across a continuum of compute budgets. Our approach efficiently combines gradient information with cross-network structure correlations, approximated via an evolutionary algorithm, does not require labeled data, generalizes to models without a classification head, and is retraining-free. Experiments on DINO, SigLIPv2, DeIT, and AugReg models demonstrate superior performance over state-of-the-art methods across various sparsities, requiring less than five minutes on a single A100 GPU to generate elastic models that can be adjusted to any computational budget. Our key contributions include an efficient pruning strategy for pretrained Vision Transformers, a novel evolutionary approximation of Hessian off-diagonal structures, and a self-supervised importance scoring mechanism that maintains strong performance without requiring retraining or labels.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Vision foundation models achieve remarkable performance but are only available in a limited set of pre-determined sizes, forcing sub-optimal deployment choices under real-world constraints. We introduce SnapViT: Single-shot network approximation for pruned Vision Transformers, a new post-pretraining structured pruning method that enables elastic inference across a continuum of compute budgets. Our approach efficiently combines gradient information with cross-network structure correlations, approximated via an evolutionary algorithm, does not require labeled data, generalizes to models without a classification head, and is retraining-free. Experiments on DINO, SigLIPv2, DeIT, and AugReg models demonstrate superior performance over state-of-the-art methods across various sparsities, requiring less than five minutes on a single A100 GPU to generate elastic models that can be adjusted to any computational budget. Our key contributions include an efficient pruning strategy for pretrained Vision Transformers, a novel evolutionary approximation of Hessian off-diagonal structures, and a self-supervised importance scoring mechanism that maintains strong performance without requiring retraining or labels. |

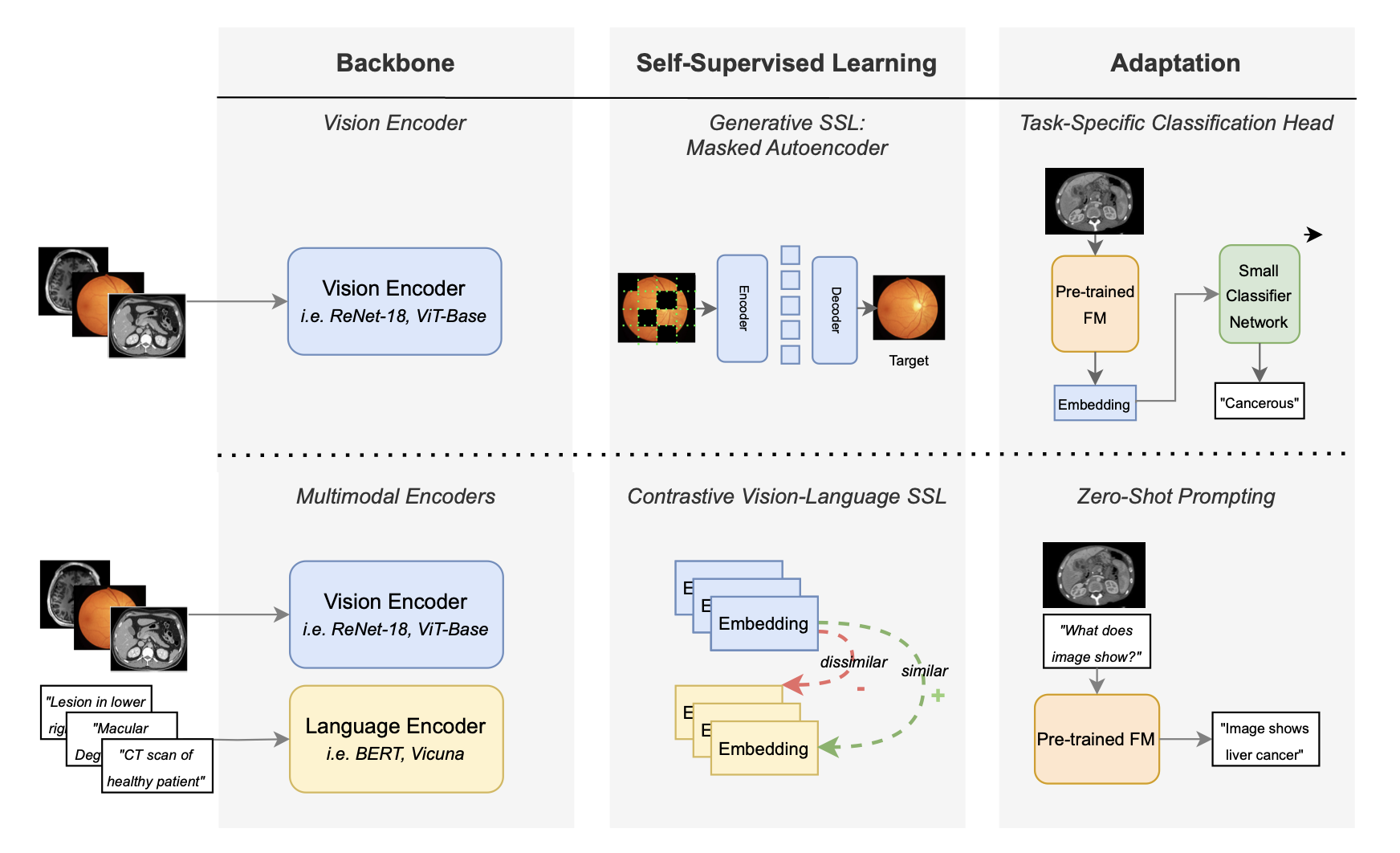

| Tim Veenboer, George Yiasemis, Eric Marcus, Vivien van Veldhuizen, Cees G M Snoek, Jonas Teuwen, Kevin B. W. Groot Lipman: TAP-CT: 3D Task-Agnostic Pretraining of Computed Tomography Foundation Models. arXiv:2512.00872, 2025. @unpublished{VeenboerArxiv2025,

title = {TAP-CT: 3D Task-Agnostic Pretraining of Computed Tomography Foundation Models},

author = {Tim Veenboer and George Yiasemis and Eric Marcus and Vivien van Veldhuizen and Cees G M Snoek and Jonas Teuwen and Kevin B. W. Groot Lipman},

url = {https://huggingface.co/fomofo/tap-ct-b-3d

https://arxiv.org/abs/2512.00872},

year = {2025},

date = {2025-11-30},

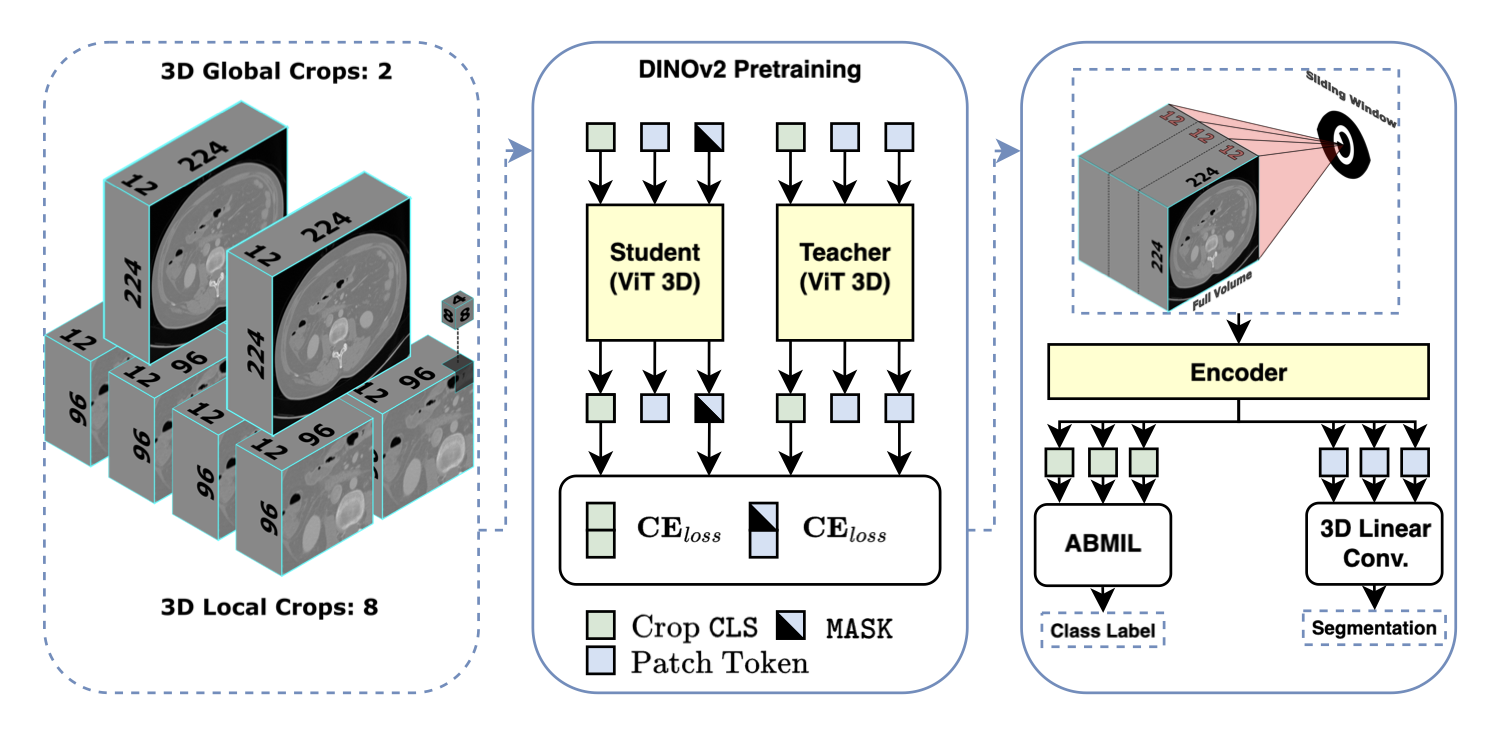

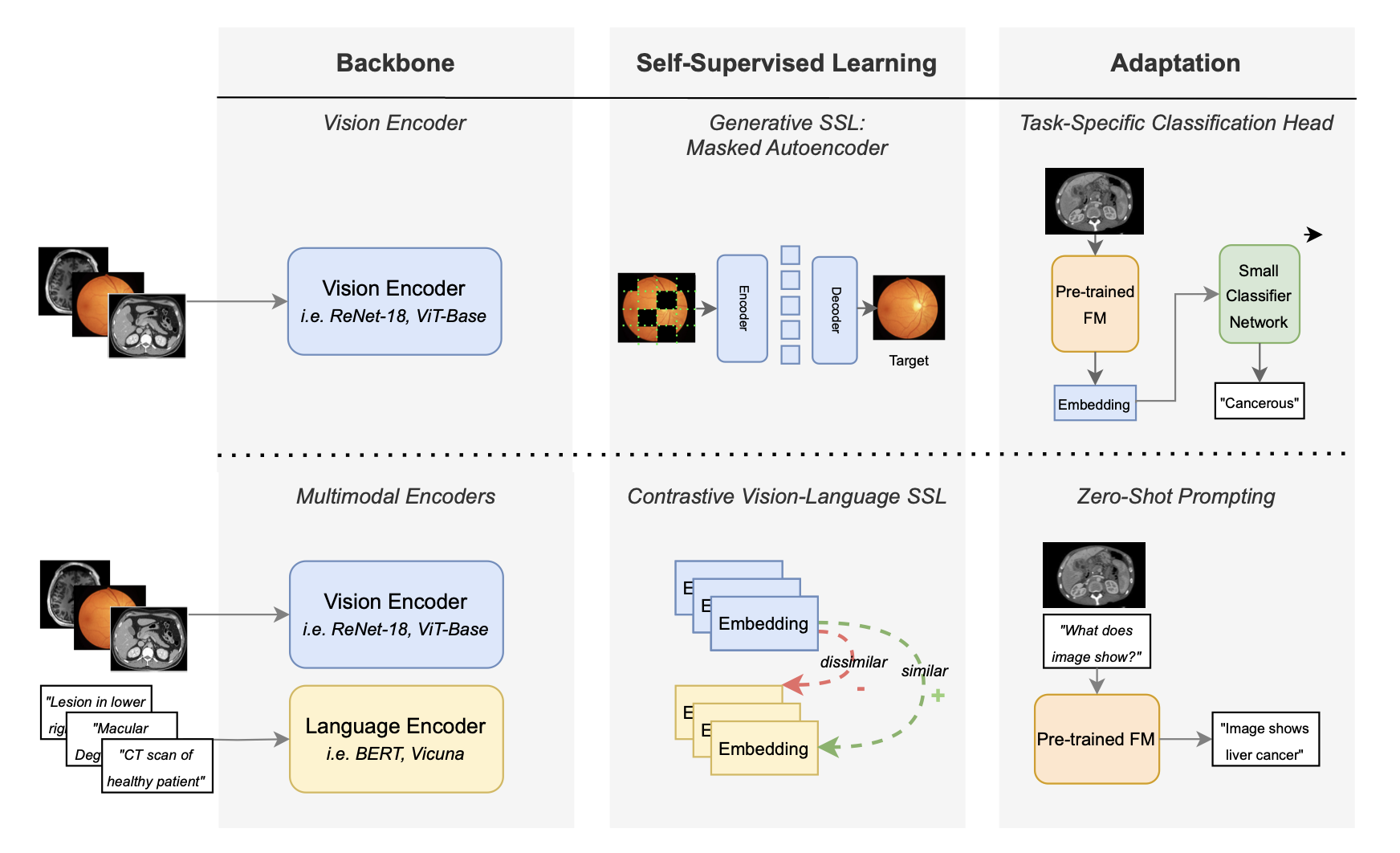

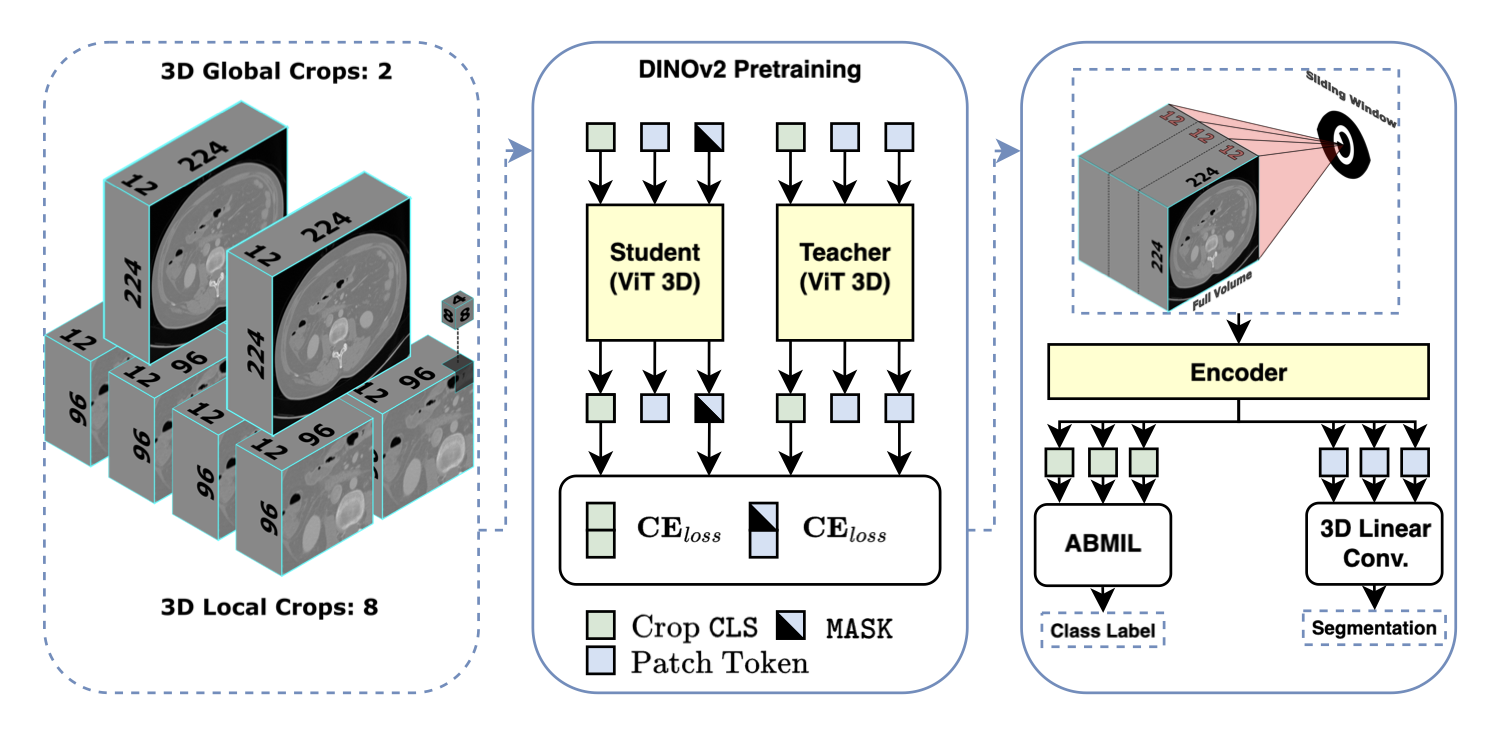

abstract = {Existing foundation models (FMs) in the medical domain often require extensive fine-tuning or rely on training resource-intensive decoders, while many existing encoders are pretrained with objectives biased toward specific tasks. This illustrates a need for a strong, task-agnostic foundation model that requires minimal fine-tuning beyond feature extraction. In this work, we introduce a suite of task-agnostic pretraining of CT foundation models (TAP-CT): a simple yet effective adaptation of Vision Transformers (ViTs) and DINOv2 for volumetric data, enabling scalable self-supervised pretraining directly on 3D CT volumes. Our approach incorporates targeted modifications to patch embeddings, positional encodings, and volumetric augmentations, making the architecture depth-aware while preserving the simplicity of the underlying architectures. We show that large-scale 3D pretraining on an extensive in-house CT dataset (105K volumes) yields stable, robust frozen representations that generalize strongly across downstream tasks. To promote transparency and reproducibility, and to establish a powerful, low-resource baseline for future research in medical imaging, we will release all pretrained models, experimental configurations, and downstream benchmark code.},

howpublished = {arXiv:2512.00872},

keywords = {},

pubstate = {published},

tppubtype = {unpublished}

}

Existing foundation models (FMs) in the medical domain often require extensive fine-tuning or rely on training resource-intensive decoders, while many existing encoders are pretrained with objectives biased toward specific tasks. This illustrates a need for a strong, task-agnostic foundation model that requires minimal fine-tuning beyond feature extraction. In this work, we introduce a suite of task-agnostic pretraining of CT foundation models (TAP-CT): a simple yet effective adaptation of Vision Transformers (ViTs) and DINOv2 for volumetric data, enabling scalable self-supervised pretraining directly on 3D CT volumes. Our approach incorporates targeted modifications to patch embeddings, positional encodings, and volumetric augmentations, making the architecture depth-aware while preserving the simplicity of the underlying architectures. We show that large-scale 3D pretraining on an extensive in-house CT dataset (105K volumes) yields stable, robust frozen representations that generalize strongly across downstream tasks. To promote transparency and reproducibility, and to establish a powerful, low-resource baseline for future research in medical imaging, we will release all pretrained models, experimental configurations, and downstream benchmark code. |

| Daniel Cores, Michael Dorkenwald, Manuel Mucientes, Cees G M Snoek, Yuki M Asano: Lost in Time: A New Temporal Benchmark for VideoLLMs. In: BMVC, 2025. @inproceedings{CoresBMVC2025,

title = {Lost in Time: A New Temporal Benchmark for VideoLLMs},

author = {Daniel Cores and Michael Dorkenwald and Manuel Mucientes and Cees G M Snoek and Yuki M Asano},

url = {https://arxiv.org/abs/2410.07752},

year = {2025},

date = {2025-11-24},

urldate = {2025-11-24},

booktitle = {BMVC},

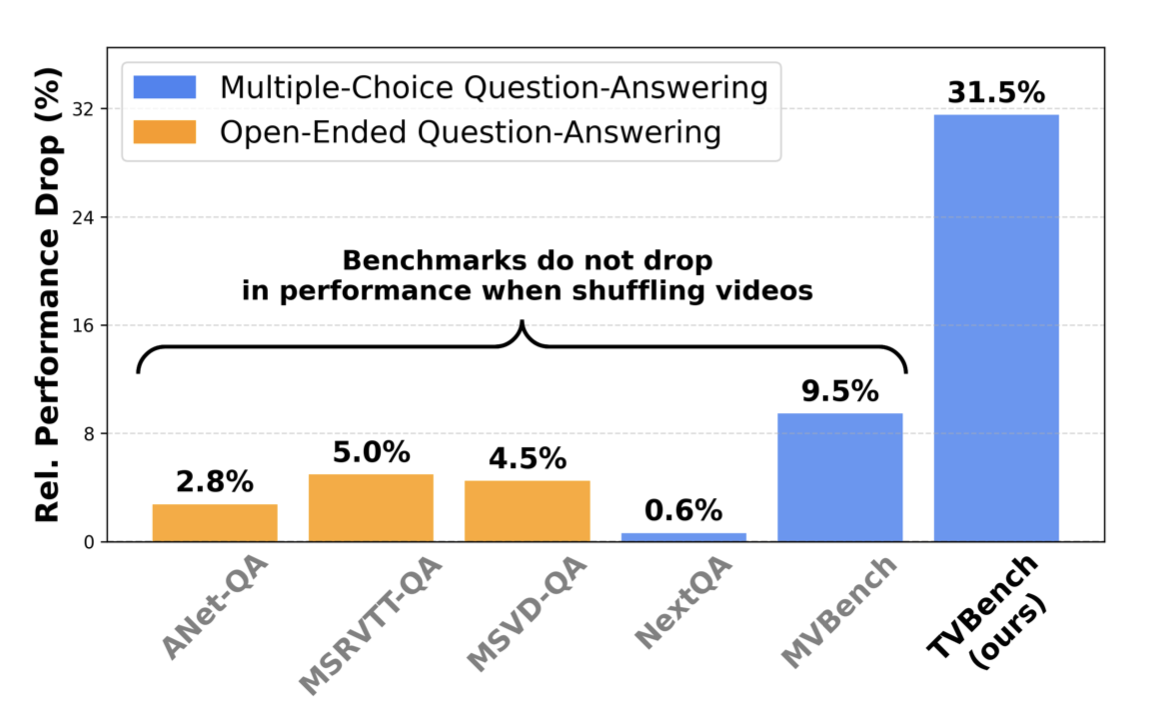

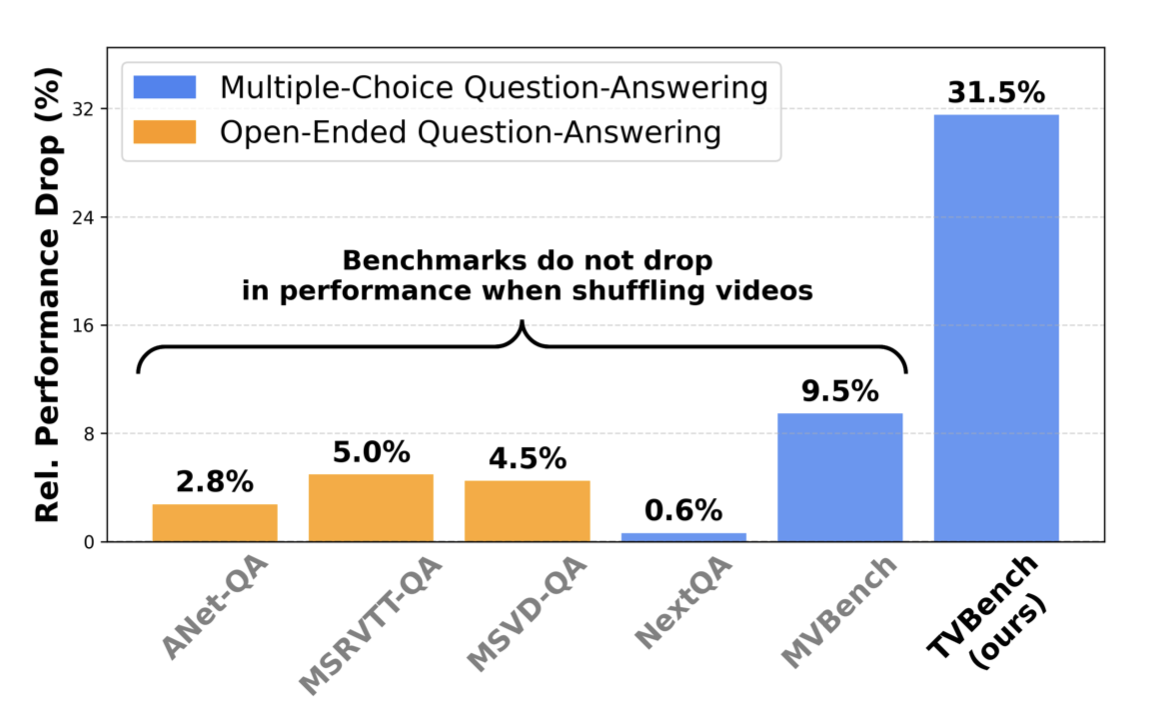

abstract = {Large language models have demonstrated impressive performance when integrated with vision models even enabling video understanding. However, evaluating video models presents its own unique challenges, for which several benchmarks have been proposed. In this paper, we show that the currently most used video-language benchmarks can be solved without requiring much temporal reasoning. We identified three main issues in existing datasets: (i) static information from single frames is often sufficient to solve the tasks (ii) the text of the questions and candidate answers is overly informative, allowing models to answer correctly without relying on any visual input (iii) world knowledge alone can answer many of the questions, making the benchmarks a test of knowledge replication rather than video reasoning. In addition, we found that open-ended question-answering benchmarks for video understanding suffer from similar issues while the automatic evaluation process with LLMs is unreliable, making it an unsuitable alternative. As a solution, we propose TVBench, a novel open-source video multiple-choice question-answering benchmark, and demonstrate through extensive evaluations that it requires a high level of temporal understanding. Surprisingly, we find that many recent video-language models perform similarly to random performance on TVBench, with only a few models such as Aria, Qwen2-VL, and Tarsier surpassing this baseline.},

howpublished = {arXiv:2410.07752},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Large language models have demonstrated impressive performance when integrated with vision models even enabling video understanding. However, evaluating video models presents its own unique challenges, for which several benchmarks have been proposed. In this paper, we show that the currently most used video-language benchmarks can be solved without requiring much temporal reasoning. We identified three main issues in existing datasets: (i) static information from single frames is often sufficient to solve the tasks (ii) the text of the questions and candidate answers is overly informative, allowing models to answer correctly without relying on any visual input (iii) world knowledge alone can answer many of the questions, making the benchmarks a test of knowledge replication rather than video reasoning. In addition, we found that open-ended question-answering benchmarks for video understanding suffer from similar issues while the automatic evaluation process with LLMs is unreliable, making it an unsuitable alternative. As a solution, we propose TVBench, a novel open-source video multiple-choice question-answering benchmark, and demonstrate through extensive evaluations that it requires a high level of temporal understanding. Surprisingly, we find that many recent video-language models perform similarly to random performance on TVBench, with only a few models such as Aria, Qwen2-VL, and Tarsier surpassing this baseline. |

| Aritra Bhowmik, Mohammad Mahdi Derakhshani, Dennis Koelma, Yuki M Asano, Martin R Oswald, Cees G M Snoek: TWIST & SCOUT: Grounding Multimodal LLM-Experts by Forget-Free Tuning. In: ICCV, 2025. @inproceedings{BhowmikICCV2025,

title = {TWIST & SCOUT: Grounding Multimodal LLM-Experts by Forget-Free Tuning},

author = {Aritra Bhowmik and Mohammad Mahdi Derakhshani and Dennis Koelma and Yuki M Asano and Martin R Oswald and Cees G M Snoek},

url = {https://arxiv.org/abs/2410.10491},

year = {2025},

date = {2025-10-19},

urldate = {2025-03-20},

booktitle = {ICCV},

abstract = {Spatial awareness is key to enable embodied multimodal AI systems. Yet, without vast amounts of spatial supervision, current Multimodal Large Language Models (MLLMs) struggle at this task. In this paper, we introduce TWIST & SCOUT, a framework that equips pre-trained MLLMs with visual grounding ability without forgetting their existing image and language understanding skills. To this end, we propose TWIST, a twin-expert stepwise tuning module that modifies the decoder of the language model using one frozen module pre-trained on image understanding tasks and another learnable one for visual grounding tasks. This allows the MLLM to retain previously learned knowledge and skills, while acquiring what is missing. To fine-tune the model effectively, we generate a high-quality synthetic dataset we call SCOUT, which mimics human reasoning in visual grounding. This dataset provides rich supervision signals, describing a step-by-step multimodal reasoning process, thereby simplifying the task of visual grounding. We evaluate our approach on several standard benchmark datasets, encompassing grounded image captioning, zero-shot localization, and visual grounding tasks. Our method consistently delivers strong performance across all tasks, while retaining the pre-trained image understanding capabilities.},

howpublished = {arXiv:2410.10491},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Spatial awareness is key to enable embodied multimodal AI systems. Yet, without vast amounts of spatial supervision, current Multimodal Large Language Models (MLLMs) struggle at this task. In this paper, we introduce TWIST & SCOUT, a framework that equips pre-trained MLLMs with visual grounding ability without forgetting their existing image and language understanding skills. To this end, we propose TWIST, a twin-expert stepwise tuning module that modifies the decoder of the language model using one frozen module pre-trained on image understanding tasks and another learnable one for visual grounding tasks. This allows the MLLM to retain previously learned knowledge and skills, while acquiring what is missing. To fine-tune the model effectively, we generate a high-quality synthetic dataset we call SCOUT, which mimics human reasoning in visual grounding. This dataset provides rich supervision signals, describing a step-by-step multimodal reasoning process, thereby simplifying the task of visual grounding. We evaluate our approach on several standard benchmark datasets, encompassing grounded image captioning, zero-shot localization, and visual grounding tasks. Our method consistently delivers strong performance across all tasks, while retaining the pre-trained image understanding capabilities. |

| Mohammadreza Salehi, Shashanka Venkataramanan, Ioana Simion, Efstratios Gavves, Cees G M Snoek, Yuki M Asano: MoSiC: Optimal-Transport Motion Trajectory for Dense Self-Supervised Learning. In: ICCV, 2025. @inproceedings{SalehiICCV2025,

title = {MoSiC: Optimal-Transport Motion Trajectory for Dense Self-Supervised Learning},

author = {Mohammadreza Salehi and Shashanka Venkataramanan and Ioana Simion and Efstratios Gavves and Cees G M Snoek and Yuki M Asano},

url = {https://arxiv.org/abs/2506.08694

https://github.com/SMSD75/MoSiC/tree/main},

year = {2025},

date = {2025-10-19},

urldate = {2025-10-19},

booktitle = {ICCV},

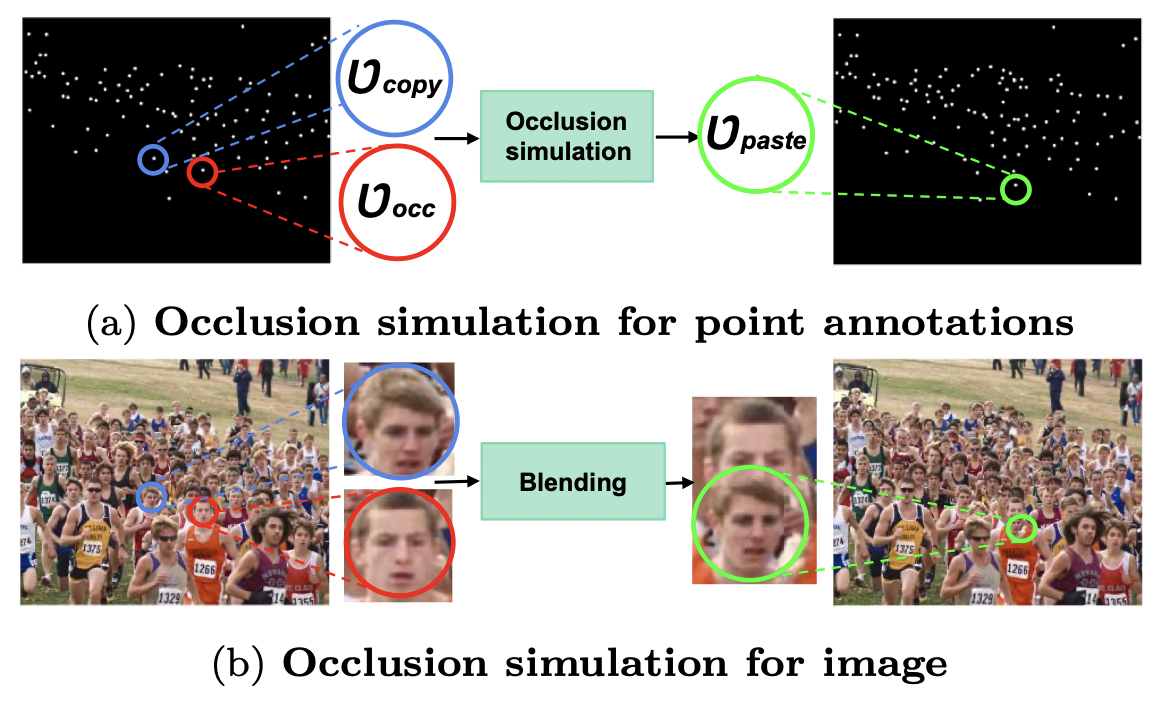

abstract = {Dense self-supervised learning has shown great promise for learning pixel- and patch-level representations, but extending it to videos remains challenging due to the complexity of motion dynamics. Existing approaches struggle as they rely on static augmentations that fail under object deformations, occlusions, and camera movement, leading to inconsistent feature learning over time. We propose a motion-guided self-supervised learning framework that clusters dense point tracks to learn spatiotemporally consistent representations. By leveraging an off-the-shelf point tracker, we extract long-range motion trajectories and optimize feature clustering through a momentum-encoder-based optimal transport mechanism. To ensure temporal coherence, we propagate cluster assignments along tracked points, enforcing feature consistency across views despite viewpoint changes. Integrating motion as an implicit supervisory signal, our method learns representations that generalize across frames, improving robustness in dynamic scenes and challenging occlusion scenarios. By initializing from strong image-pretrained models and leveraging video data for training, we improve state-of-the-art by 1% to 6% on six image and video datasets and four evaluation benchmarks.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Dense self-supervised learning has shown great promise for learning pixel- and patch-level representations, but extending it to videos remains challenging due to the complexity of motion dynamics. Existing approaches struggle as they rely on static augmentations that fail under object deformations, occlusions, and camera movement, leading to inconsistent feature learning over time. We propose a motion-guided self-supervised learning framework that clusters dense point tracks to learn spatiotemporally consistent representations. By leveraging an off-the-shelf point tracker, we extract long-range motion trajectories and optimize feature clustering through a momentum-encoder-based optimal transport mechanism. To ensure temporal coherence, we propagate cluster assignments along tracked points, enforcing feature consistency across views despite viewpoint changes. Integrating motion as an implicit supervisory signal, our method learns representations that generalize across frames, improving robustness in dynamic scenes and challenging occlusion scenarios. By initializing from strong image-pretrained models and leveraging video data for training, we improve state-of-the-art by 1% to 6% on six image and video datasets and four evaluation benchmarks. |

| Vladimir Yugay, Duy-Kien Nguyen, Theo Gevers, Cees G M Snoek, Martin R Oswald: Visual Odometry with Transformers. arXiv:2510.03348, 2025. @unpublished{YugayArxiv2025,

title = {Visual Odometry with Transformers},

author = {Vladimir Yugay and Duy-Kien Nguyen and Theo Gevers and Cees G M Snoek and Martin R Oswald},

url = {https://arxiv.org/abs/2510.03348

https://vladimiryugay.github.io/vot/},

year = {2025},

date = {2025-10-02},

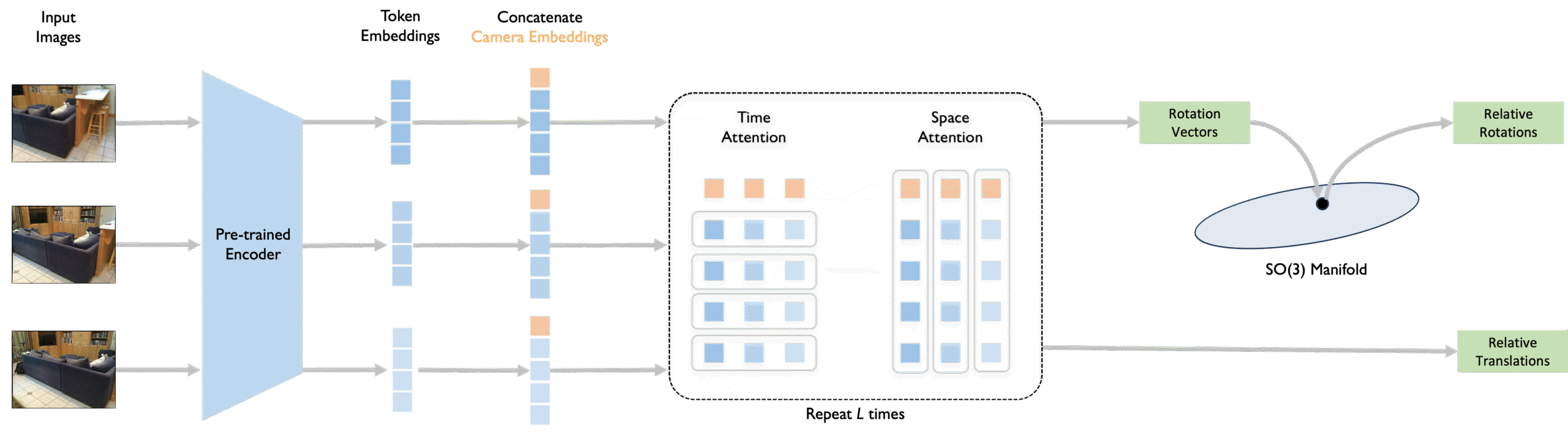

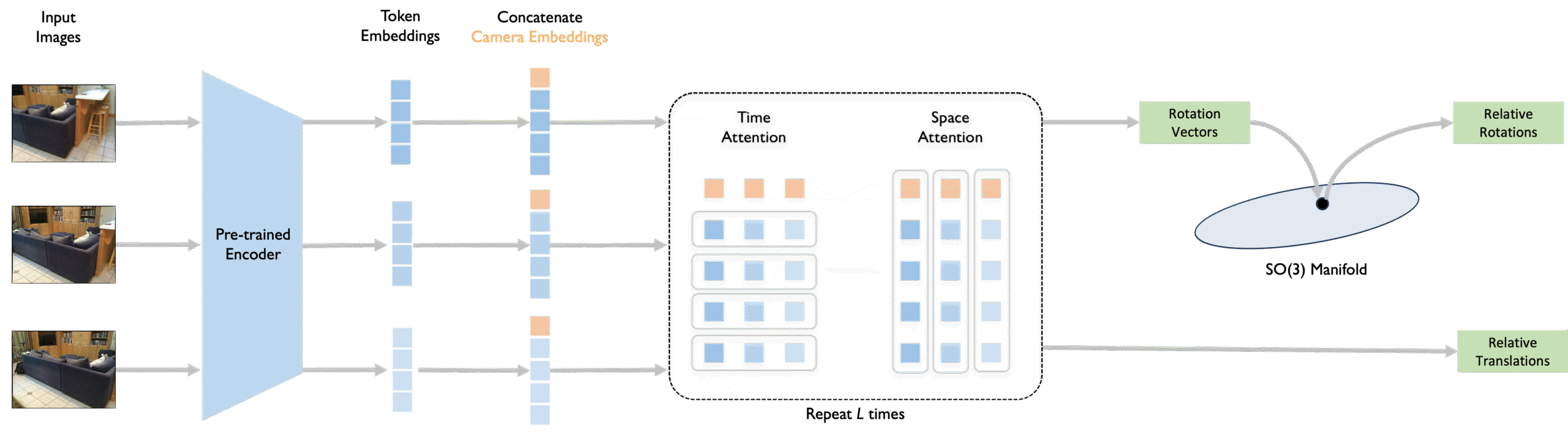

abstract = {Modern monocular visual odometry methods typically combine pre-trained deep learning components with optimization modules, resulting in complex pipelines that rely heavily on camera calibration and hyperparameter tuning, and often struggle in unseen real-world scenarios. Recent large-scale 3D models trained on massive amounts of multi-modal data have partially alleviated these challenges, providing generalizable dense reconstruction and camera pose estimation. Still, they remain limited in handling long videos and providing accurate per-frame estimates, which are required for visual odometry. In this work, we demonstrate that monocular visual odometry can be addressed effectively in an end-to-end manner, thereby eliminating the need for handcrafted components such as bundle adjustment, feature matching, camera calibration, or dense 3D reconstruction. We introduce VoT, short for Visual odometry Transformer, which processes sequences of monocular frames by extracting features and modeling global relationships through temporal and spatial attention. Unlike prior methods, VoT directly predicts camera motion without estimating dense geometry and relies solely on camera poses for supervision. The framework is modular and flexible, allowing seamless integration of various pre-trained encoders as feature extractors. Experimental results demonstrate that VoT scales effectively with larger datasets, benefits substantially from stronger pre-trained backbones, generalizes across diverse camera motions and calibration settings, and outperforms traditional methods while running more than 3 times faster. },

howpublished = {arXiv:2510.03348},

keywords = {},

pubstate = {published},

tppubtype = {unpublished}

}

Modern monocular visual odometry methods typically combine pre-trained deep learning components with optimization modules, resulting in complex pipelines that rely heavily on camera calibration and hyperparameter tuning, and often struggle in unseen real-world scenarios. Recent large-scale 3D models trained on massive amounts of multi-modal data have partially alleviated these challenges, providing generalizable dense reconstruction and camera pose estimation. Still, they remain limited in handling long videos and providing accurate per-frame estimates, which are required for visual odometry. In this work, we demonstrate that monocular visual odometry can be addressed effectively in an end-to-end manner, thereby eliminating the need for handcrafted components such as bundle adjustment, feature matching, camera calibration, or dense 3D reconstruction. We introduce VoT, short for Visual odometry Transformer, which processes sequences of monocular frames by extracting features and modeling global relationships through temporal and spatial attention. Unlike prior methods, VoT directly predicts camera motion without estimating dense geometry and relies solely on camera poses for supervision. The framework is modular and flexible, allowing seamless integration of various pre-trained encoders as feature extractors. Experimental results demonstrate that VoT scales effectively with larger datasets, benefits substantially from stronger pre-trained backbones, generalizes across diverse camera motions and calibration settings, and outperforms traditional methods while running more than 3 times faster. |

| Ana Manzano Rodriguez, Cees G M Snoek, Marlies P Schijven: Bridging the Gap: Exposing the Hidden Challenges Towards Adoption of Artificial Intelligence in Surgery. In: BJS, vol. 112, iss. 11, 2025. @article{RodriguezBJS25,

title = {Bridging the Gap: Exposing the Hidden Challenges Towards Adoption of Artificial Intelligence in Surgery},

author = {Ana Manzano Rodriguez and Cees G M Snoek and Marlies P Schijven},

url = {https://doi.org/10.1093/bjs/znaf217},

year = {2025},

date = {2025-09-09},

urldate = {2025-09-09},

journal = {BJS},

volume = {112},

issue = {11},

abstract = {Bridging the gap between AI research and surgery is essential for reaping the benefits AI can bring to surgical practice. The path forward is clear: fostering better collaboration between these very different fields of expertise. Only through collective action can surgical AI move beyond isolated studies towards meaningful advancements creating a true ecosystem. With well-defined standards, the field can evolve faster, achieving the significant advances we are all expecting. The potential is immense, but without structured cooperation, it will remain unrealized. Now is the time for our disciplines to unite, plan and deliver.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Bridging the gap between AI research and surgery is essential for reaping the benefits AI can bring to surgical practice. The path forward is clear: fostering better collaboration between these very different fields of expertise. Only through collective action can surgical AI move beyond isolated studies towards meaningful advancements creating a true ecosystem. With well-defined standards, the field can evolve faster, achieving the significant advances we are all expecting. The potential is immense, but without structured cooperation, it will remain unrealized. Now is the time for our disciplines to unite, plan and deliver. |

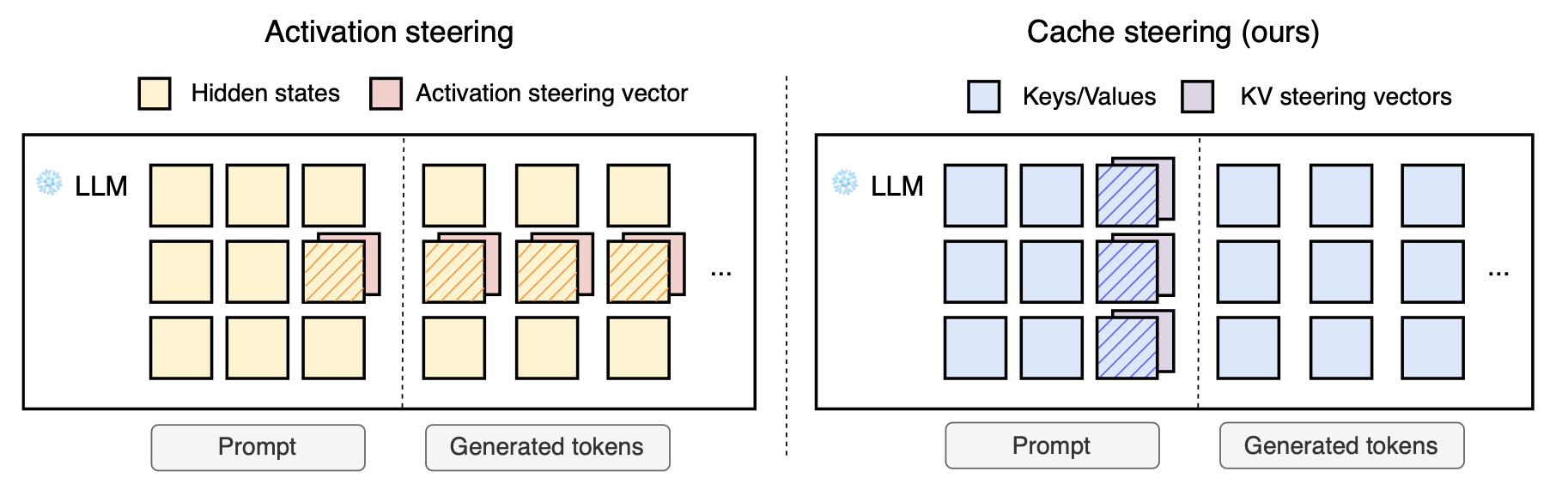

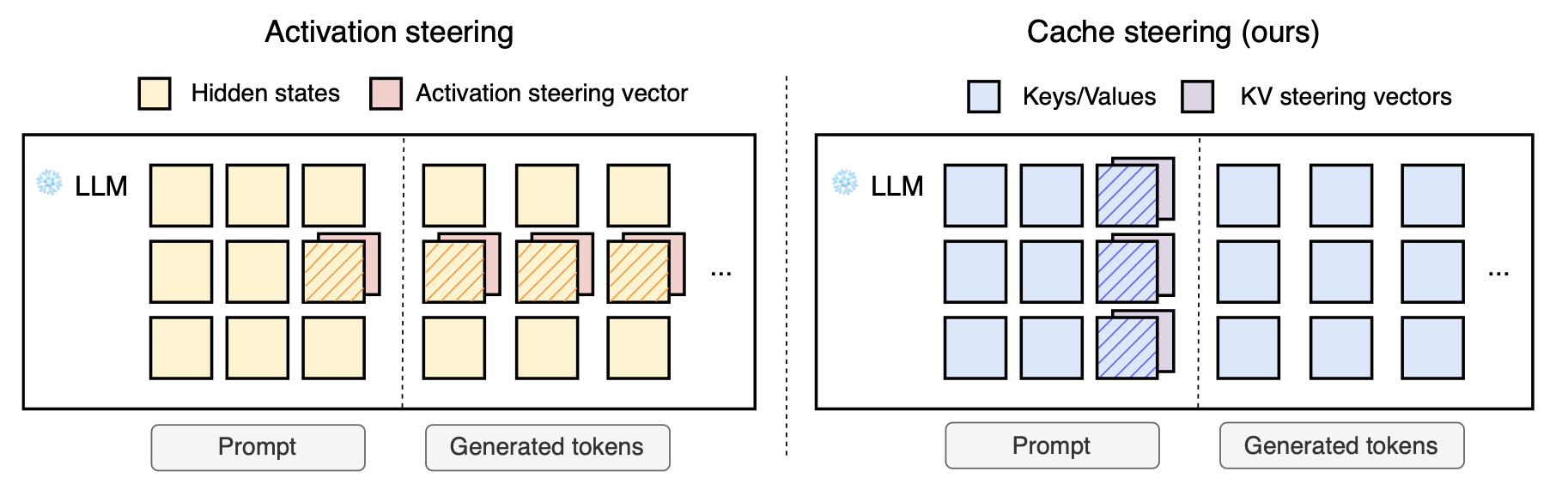

| Max Belitsky, Dawid J Kopiczko, Michael Dorkenwald, M. Jehanzeb Mirza, Cees G M Snoek, Yuki M Asano: KV Cache Steering for Controlling Frozen LLMs. arXiv:2507.08799, 2025. @unpublished{BelitskyArxiv2025,

title = {KV Cache Steering for Controlling Frozen LLMs},

author = {Max Belitsky and Dawid J Kopiczko and Michael Dorkenwald and M. Jehanzeb Mirza and Cees G M Snoek and Yuki M Asano},

url = {https://arxiv.org/abs/2507.08799},

year = {2025},

date = {2025-07-11},

urldate = {2025-07-11},

abstract = {We propose cache steering, a lightweight method for implicit steering of language models via a one-shot intervention applied directly to the key-value cache. To validate its effectiveness, we apply cache steering to induce chain-of-thought reasoning in small language models. Our approach leverages GPT-4o-generated reasoning traces to construct steering vectors that shift model behavior toward more explicit, multi-step reasoning without fine-tuning or prompt modifications. Experimental evaluations on diverse reasoning benchmarks demonstrate that cache steering improves both the qualitative structure of model reasoning and quantitative task performance. Compared to prior activation steering techniques that require continuous interventions, our one-shot cache steering offers substantial advantages in terms of hyperparameter stability, inference-time efficiency, and ease of integration, making it a more robust and practical solution for controlled generation.},

howpublished = {arXiv:2507.08799},

keywords = {},

pubstate = {published},

tppubtype = {unpublished}

}

We propose cache steering, a lightweight method for implicit steering of language models via a one-shot intervention applied directly to the key-value cache. To validate its effectiveness, we apply cache steering to induce chain-of-thought reasoning in small language models. Our approach leverages GPT-4o-generated reasoning traces to construct steering vectors that shift model behavior toward more explicit, multi-step reasoning without fine-tuning or prompt modifications. Experimental evaluations on diverse reasoning benchmarks demonstrate that cache steering improves both the qualitative structure of model reasoning and quantitative task performance. Compared to prior activation steering techniques that require continuous interventions, our one-shot cache steering offers substantial advantages in terms of hyperparameter stability, inference-time efficiency, and ease of integration, making it a more robust and practical solution for controlled generation. |

| Mohammad Mahdi Derakhshani, Dheeraj Varghese, Marzieh Fadaee, Cees G M Snoek: NeoBabel: A Multilingual Open Tower for Visual Generation. arXiv:2507.06137, 2025. @unpublished{DerakhshaniArxiv2025,

title = {NeoBabel: A Multilingual Open Tower for Visual Generation},

author = {Mohammad Mahdi Derakhshani and Dheeraj Varghese and Marzieh Fadaee and Cees G M Snoek},

url = {https://arxiv.org/abs/2507.06137

https://neo-babel.github.io},

year = {2025},

date = {2025-07-08},

urldate = {2025-07-08},

abstract = {Text-to-image generation advancements have been predominantly English-centric, creating barriers for non-English speakers and perpetuating digital inequities. While existing systems rely on translation pipelines, these introduce semantic drift, computational overhead, and cultural misalignment. We introduce NeoBabel, a novel multilingual image generation framework that sets a new Pareto frontier in performance, efficiency and inclusivity, supporting six languages: English, Chinese, Dutch, French, Hindi, and Persian. The model is trained using a combination of large-scale multilingual pretraining and high-resolution instruction tuning. To evaluate its capabilities, we expand two English-only benchmarks to multilingual equivalents: m-GenEval and m-DPG. NeoBabel achieves state-of-the-art multilingual performance while retaining strong English capability, scoring 0.75 on m-GenEval and 0.68 on m-DPG. Notably, it performs on par with leading models on English tasks while outperforming them by +0.11 and +0.09 on multilingual benchmarks, even though these models are built on multilingual base LLMs. This demonstrates the effectiveness of our targeted alignment training for preserving and extending crosslingual generalization. We further introduce two new metrics to rigorously assess multilingual alignment and robustness to code-mixed prompts. Notably, NeoBabel matches or exceeds English-only models while being 2-4x smaller. We release an open toolkit, including all code, model checkpoints, a curated dataset of 124M multilingual text-image pairs, and standardized multilingual evaluation protocols, to advance inclusive AI research. Our work demonstrates that multilingual capability is not a trade-off but a catalyst for improved robustness, efficiency, and cultural fidelity in generative AI.},

howpublished = {arXiv:2507.06137},

keywords = {},

pubstate = {published},

tppubtype = {unpublished}

}

Text-to-image generation advancements have been predominantly English-centric, creating barriers for non-English speakers and perpetuating digital inequities. While existing systems rely on translation pipelines, these introduce semantic drift, computational overhead, and cultural misalignment. We introduce NeoBabel, a novel multilingual image generation framework that sets a new Pareto frontier in performance, efficiency and inclusivity, supporting six languages: English, Chinese, Dutch, French, Hindi, and Persian. The model is trained using a combination of large-scale multilingual pretraining and high-resolution instruction tuning. To evaluate its capabilities, we expand two English-only benchmarks to multilingual equivalents: m-GenEval and m-DPG. NeoBabel achieves state-of-the-art multilingual performance while retaining strong English capability, scoring 0.75 on m-GenEval and 0.68 on m-DPG. Notably, it performs on par with leading models on English tasks while outperforming them by +0.11 and +0.09 on multilingual benchmarks, even though these models are built on multilingual base LLMs. This demonstrates the effectiveness of our targeted alignment training for preserving and extending crosslingual generalization. We further introduce two new metrics to rigorously assess multilingual alignment and robustness to code-mixed prompts. Notably, NeoBabel matches or exceeds English-only models while being 2-4x smaller. We release an open toolkit, including all code, model checkpoints, a curated dataset of 124M multilingual text-image pairs, and standardized multilingual evaluation protocols, to advance inclusive AI research. Our work demonstrates that multilingual capability is not a trade-off but a catalyst for improved robustness, efficiency, and cultural fidelity in generative AI. |

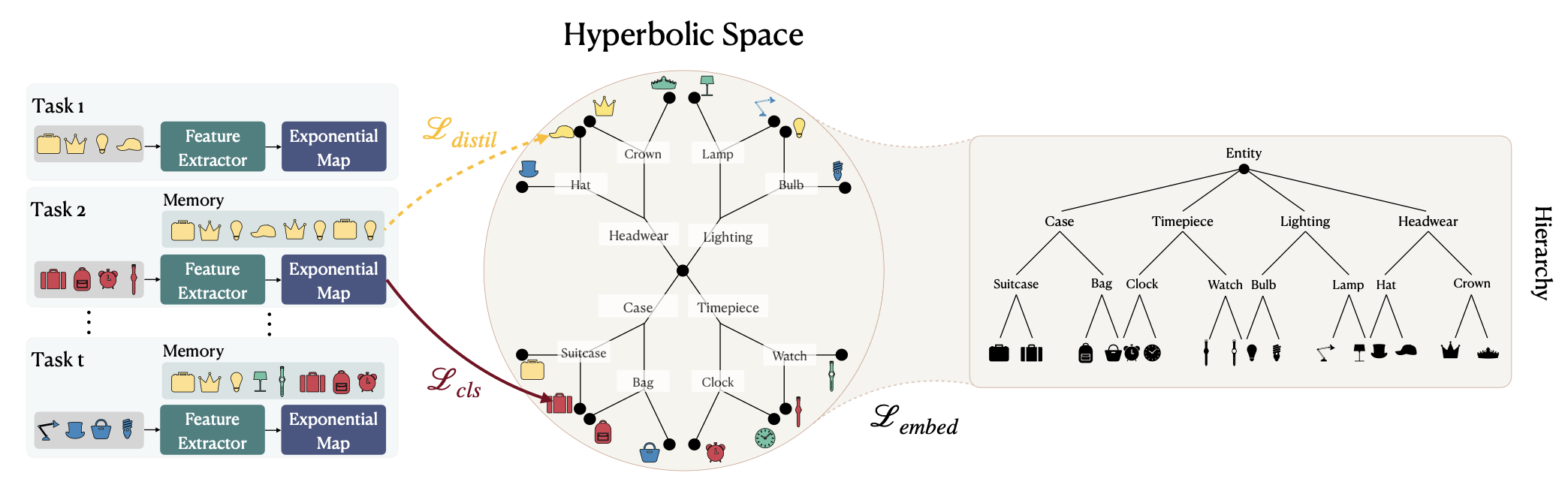

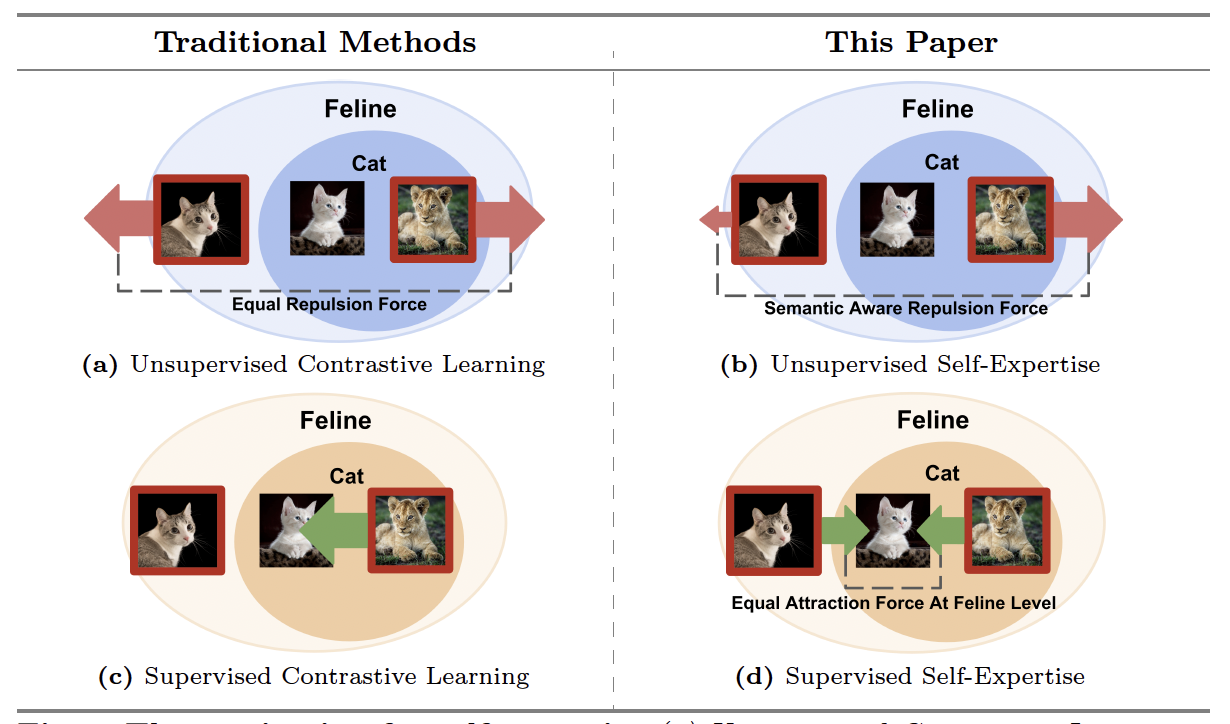

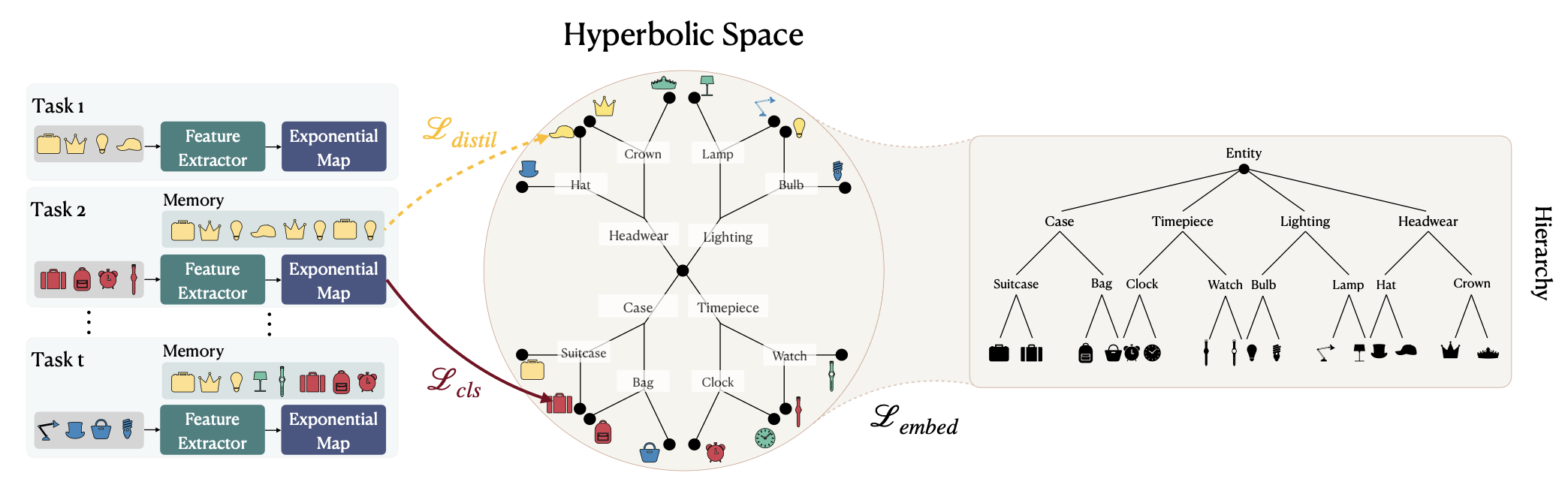

| Melika Ayoughi, Mina Ghadimi Atigh, Mohammad Mahdi Derakhshani, Cees G M Snoek, Pascal Mettes, Paul Groth: Continual Hyperbolic Learning of Instances and Classes. arXiv:2506.10710, 2025. @unpublished{ayoughiArxiv2025,

title = {Continual Hyperbolic Learning of Instances and Classes},

author = {Melika Ayoughi and Mina Ghadimi Atigh and Mohammad Mahdi Derakhshani and Cees G M Snoek and Pascal Mettes and Paul Groth},

url = {https://arxiv.org/abs/2506.10710},

year = {2025},

date = {2025-06-12},

urldate = {2025-06-12},

howpublished = {arXiv:2506.10710},

keywords = {},

pubstate = {published},

tppubtype = {unpublished}

}

|

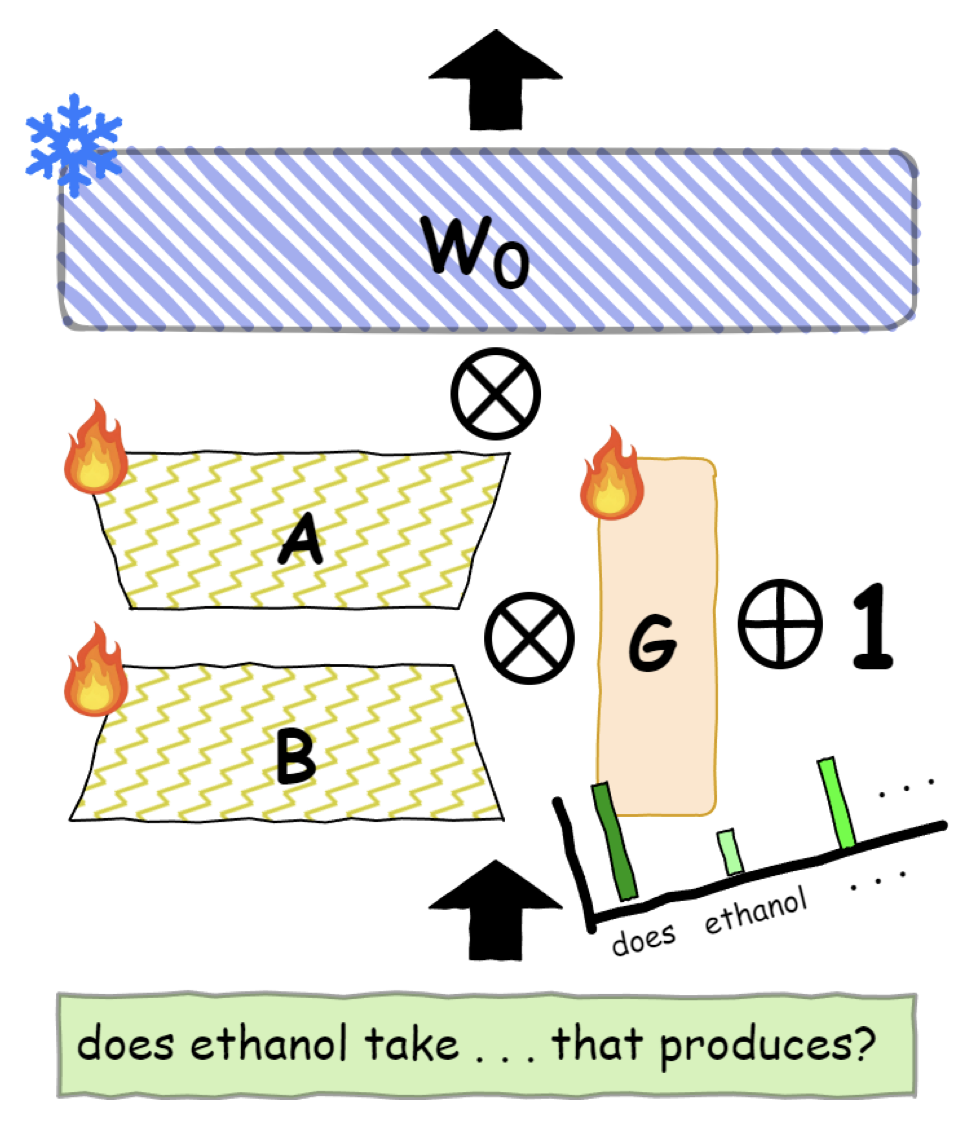

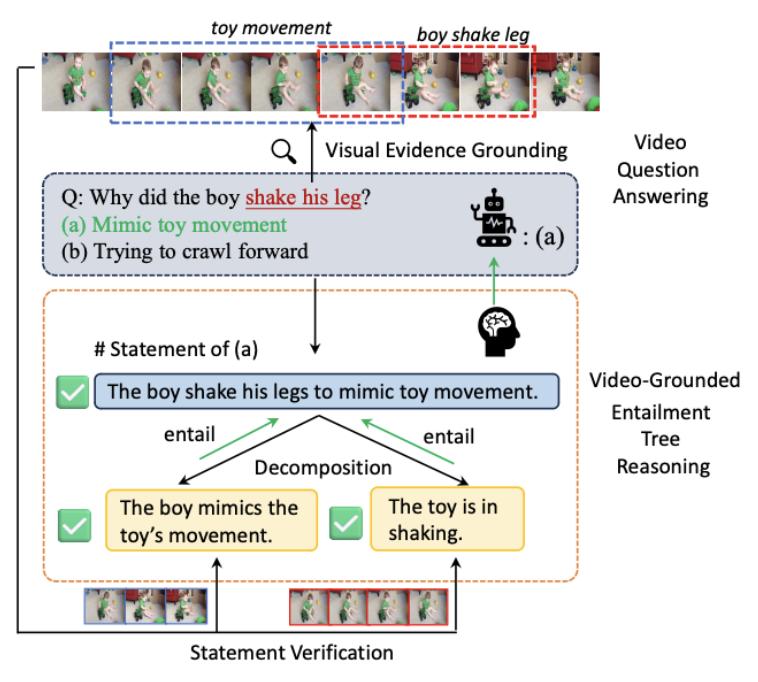

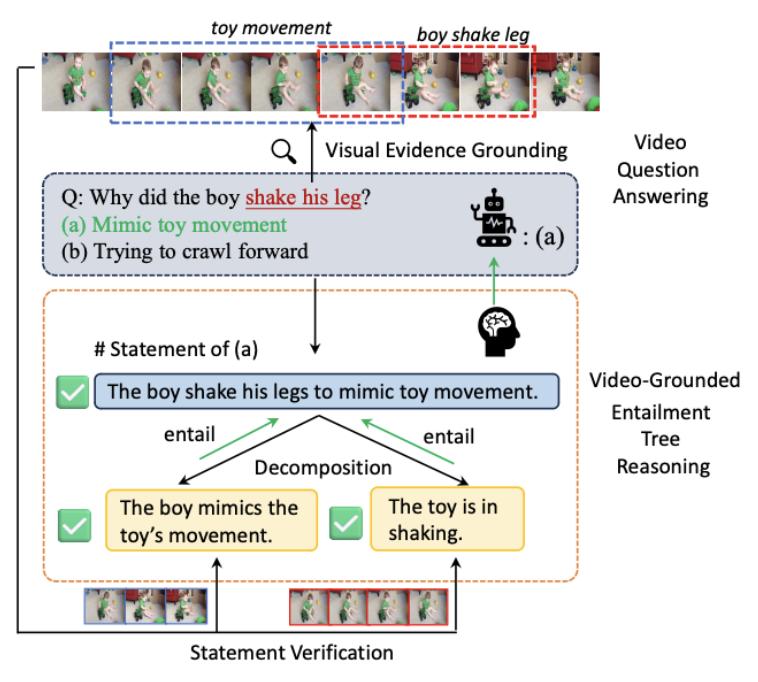

| Huabin Liu, Filip Ilievski, Cees G M Snoek: Commonsense Video Question Answering through Video-Grounded Entailment Tree Reasoning. In: CVPR, 2025. @inproceedings{LiuCVPR2025,

title = {Commonsense Video Question Answering through Video-Grounded Entailment Tree Reasoning},

author = {Huabin Liu and Filip Ilievski and Cees G M Snoek},

url = {https://arxiv.org/abs/2501.05069},

year = {2025},

date = {2025-06-11},

urldate = {2025-01-09},

booktitle = {CVPR},

abstract = {This paper proposes the first video-grounded entailment tree reasoning method for commonsense video question answering (VQA). Despite the remarkable progress of large visual-language models (VLMs), there are growing concerns that they learn spurious correlations between videos and likely answers, reinforced by their black-box nature and remaining benchmarking biases. Our method explicitly grounds VQA tasks to video fragments in four steps: entailment tree construction, video-language entailment verification, tree reasoning, and dynamic tree expansion. A vital benefit of the method is its generalizability to current video and image-based VLMs across reasoning types. To support fair evaluation, we devise a de-biasing procedure based on large-language models that rewrites VQA benchmark answer sets to enforce model reasoning. Systematic experiments on existing and de-biased benchmarks highlight the impact of our method components across benchmarks, VLMs, and reasoning types.},

howpublished = {arXiv:2501.05069},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

This paper proposes the first video-grounded entailment tree reasoning method for commonsense video question answering (VQA). Despite the remarkable progress of large visual-language models (VLMs), there are growing concerns that they learn spurious correlations between videos and likely answers, reinforced by their black-box nature and remaining benchmarking biases. Our method explicitly grounds VQA tasks to video fragments in four steps: entailment tree construction, video-language entailment verification, tree reasoning, and dynamic tree expansion. A vital benefit of the method is its generalizability to current video and image-based VLMs across reasoning types. To support fair evaluation, we devise a de-biasing procedure based on large-language models that rewrites VQA benchmark answer sets to enforce model reasoning. Systematic experiments on existing and de-biased benchmarks highlight the impact of our method components across benchmarks, VLMs, and reasoning types. |

| Vivien van Veldhuizen, Vanessa Botha, Chunyao Lu, Melis Erdal Cesur, Kevin Groot Lipman, Edwin D de Jong, Hugo Horlings, Clárisa I Sanchez, Cees G M Snoek, Lodewyk Wessels, Ritse Mann, Eric Marcus, Jonas Teuwen: Foundation Models in Medical Imaging -- A Review and Outlook. arXiv:2506.09095, 2025. @unpublished{veldhuizenArxiv2025,

title = {Foundation Models in Medical Imaging -- A Review and Outlook},

author = {Vivien van Veldhuizen and Vanessa Botha and Chunyao Lu and Melis Erdal Cesur and Kevin Groot Lipman and Edwin D de Jong and Hugo Horlings and Clárisa I Sanchez and Cees G M Snoek and Lodewyk Wessels and Ritse Mann and Eric Marcus and Jonas Teuwen},

url = {https://arxiv.org/abs/2506.09095},

year = {2025},

date = {2025-06-10},

urldate = {2025-06-10},

howpublished = {arXiv:2506.09095},

keywords = {},

pubstate = {published},

tppubtype = {unpublished}

}

|

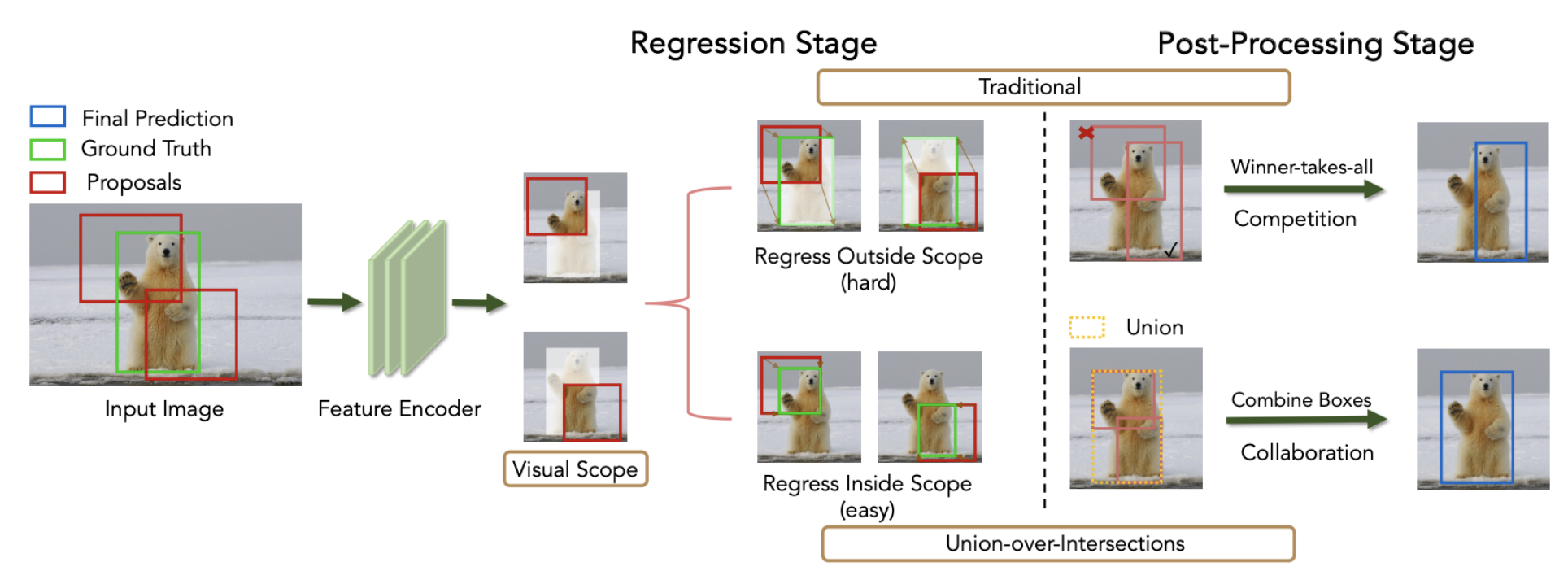

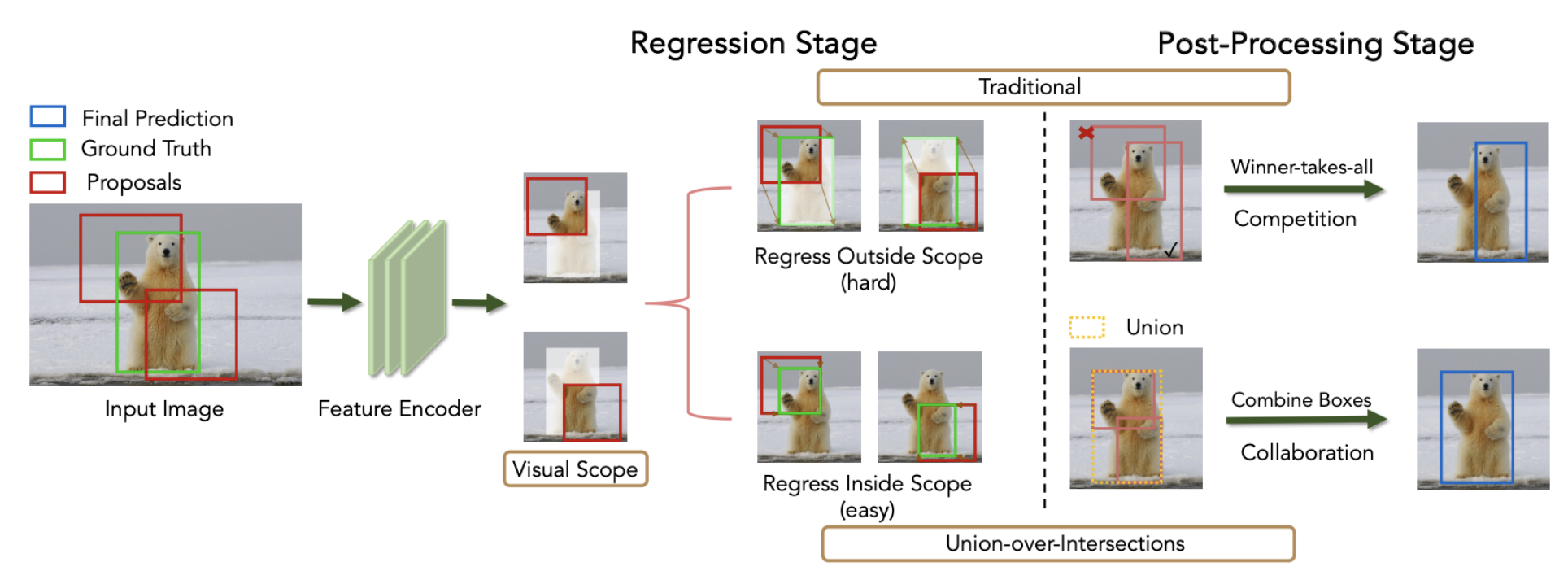

| Aritra Bhowmik, Pascal Mettes, Martin R Oswald, Cees G M Snoek: Union-over-Intersections: Object Detection beyond Winner-Takes-All. In: ICLR, 2025, (Spotlight presentation). @inproceedings{BhowmikICLR2025,

title = {Union-over-Intersections: Object Detection beyond Winner-Takes-All},

author = {Aritra Bhowmik and Pascal Mettes and Martin R Oswald and Cees G M Snoek},

url = {https://openreview.net/pdf?id=HqLHY4TzGj},

year = {2025},

date = {2025-04-24},

urldate = {2025-04-24},

booktitle = {ICLR},

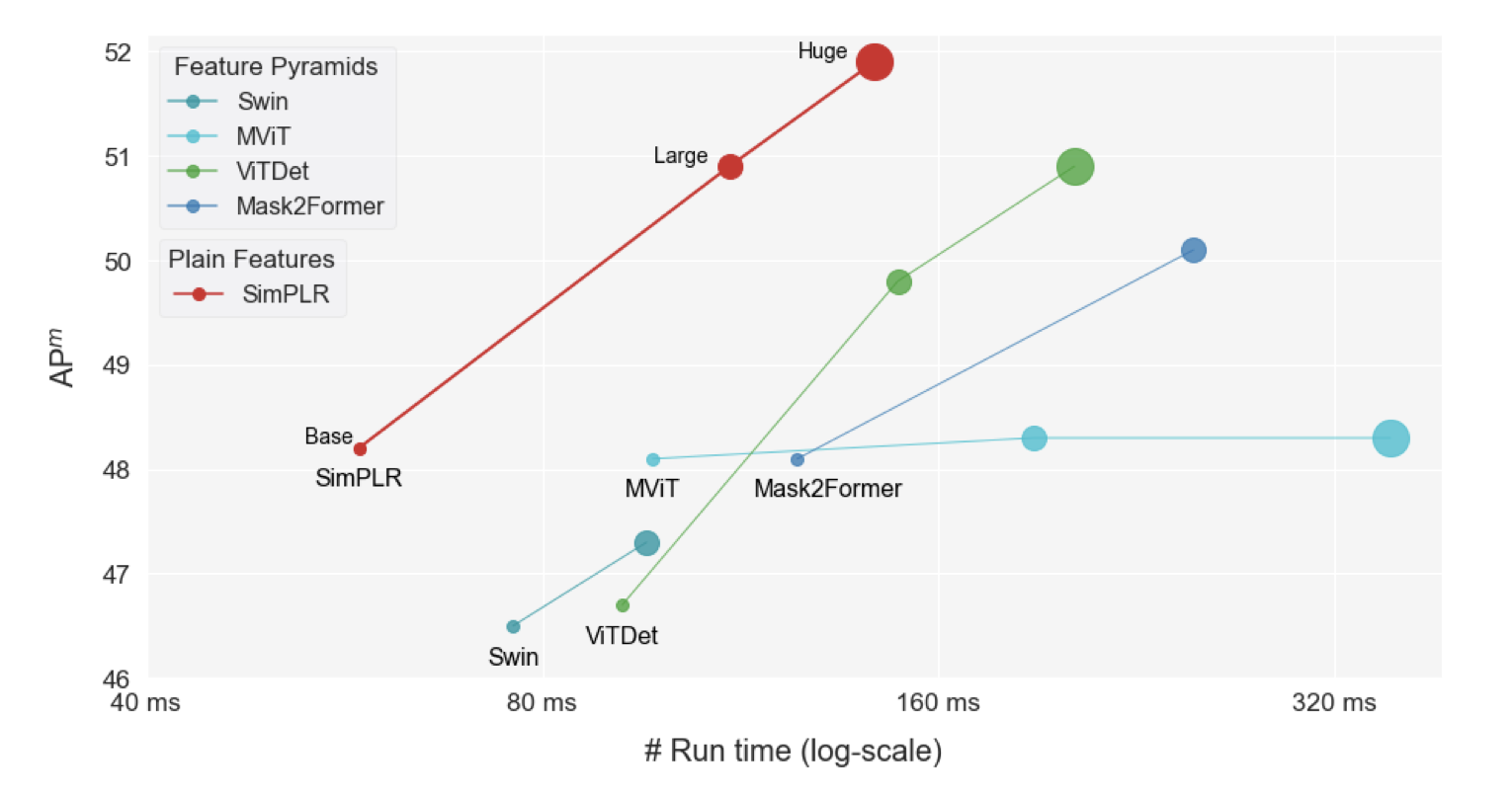

abstract = {This paper revisits the problem of predicting box locations in object detection architectures. Typically, each box proposal or box query aims to directly maximize the intersection-over-union score with the ground truth, followed by a winner-takes-all non-maximum suppression where only the highest scoring box in each region is retained. We observe that both steps are sub-optimal: the first involves regressing proposals to the entire ground truth, which is a difficult task even with large receptive fields, and the second neglects valuable information from boxes other than the top candidate. Instead of regressing proposals to the whole ground truth, we propose a simpler approach: regress only to the area of intersection between the proposal and the ground truth. This avoids the need for proposals to extrapolate beyond their visual scope, improving localization accuracy. Rather than adopting a winner-takes-all strategy, we take the union over the regressed intersections of all boxes in a region to generate the final box outputs. Our plug-and-play method integrates seamlessly into proposal-based, grid-based, and query-based detection architectures with minimal modifications, consistently improving object localization and instance segmentation. We demonstrate its broad applicability and versatility across various detection and segmentation tasks.},

howpublished = {arXiv:2311.18512},

note = {Spotlight presentation},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

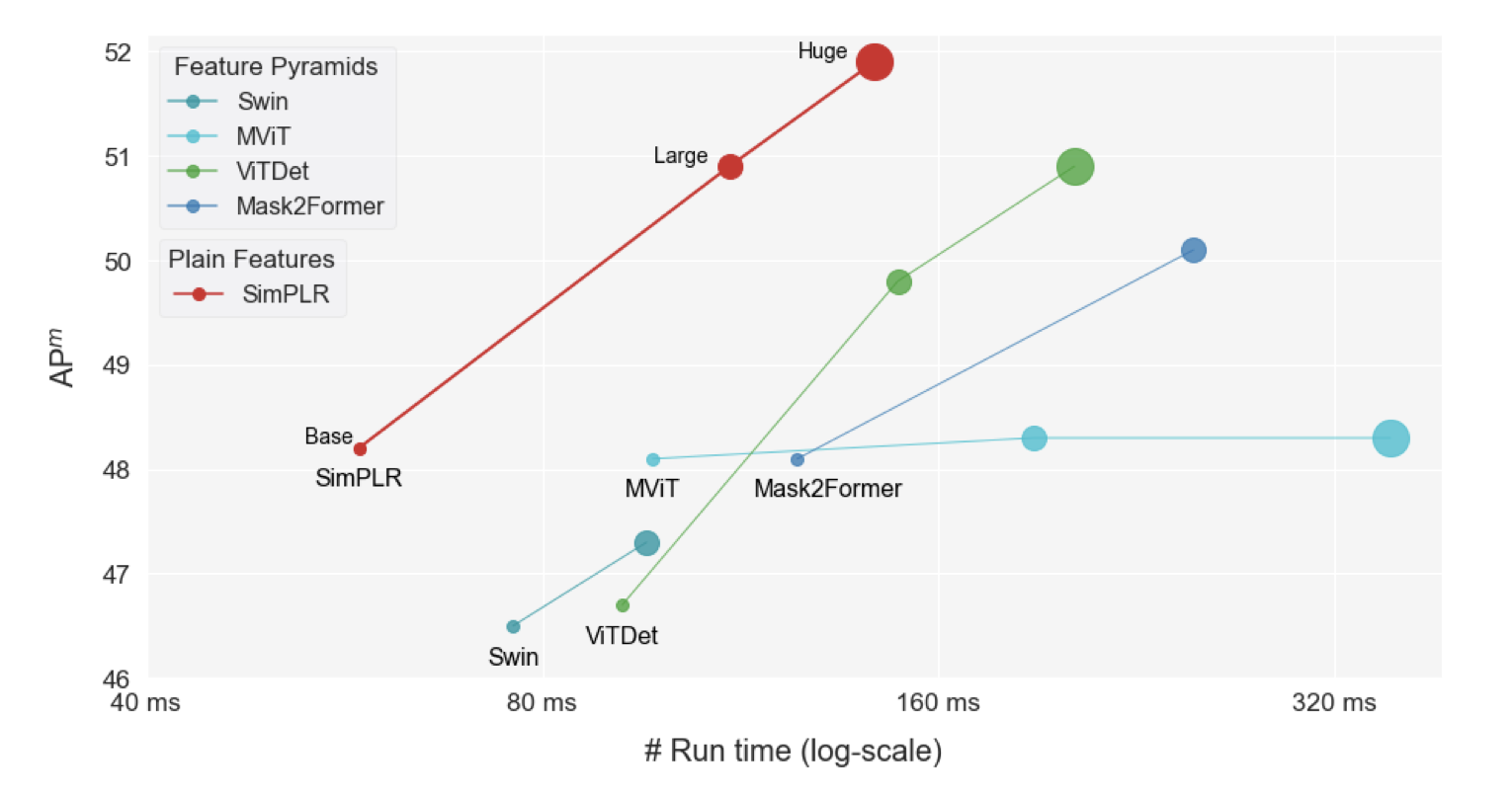

This paper revisits the problem of predicting box locations in object detection architectures. Typically, each box proposal or box query aims to directly maximize the intersection-over-union score with the ground truth, followed by a winner-takes-all non-maximum suppression where only the highest scoring box in each region is retained. We observe that both steps are sub-optimal: the first involves regressing proposals to the entire ground truth, which is a difficult task even with large receptive fields, and the second neglects valuable information from boxes other than the top candidate. Instead of regressing proposals to the whole ground truth, we propose a simpler approach: regress only to the area of intersection between the proposal and the ground truth. This avoids the need for proposals to extrapolate beyond their visual scope, improving localization accuracy. Rather than adopting a winner-takes-all strategy, we take the union over the regressed intersections of all boxes in a region to generate the final box outputs. Our plug-and-play method integrates seamlessly into proposal-based, grid-based, and query-based detection architectures with minimal modifications, consistently improving object localization and instance segmentation. We demonstrate its broad applicability and versatility across various detection and segmentation tasks. |

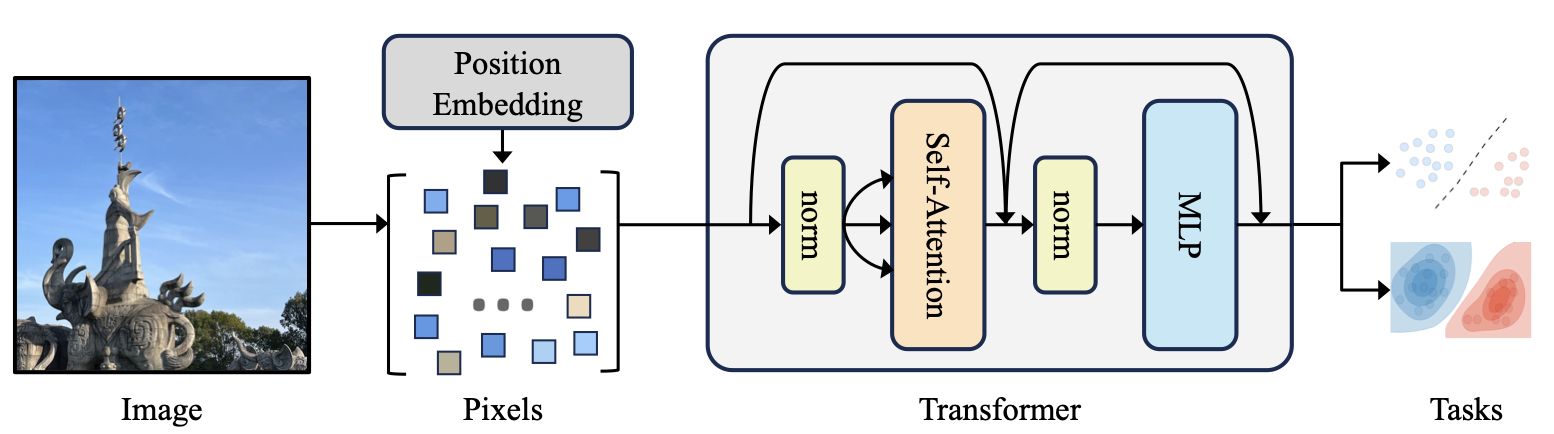

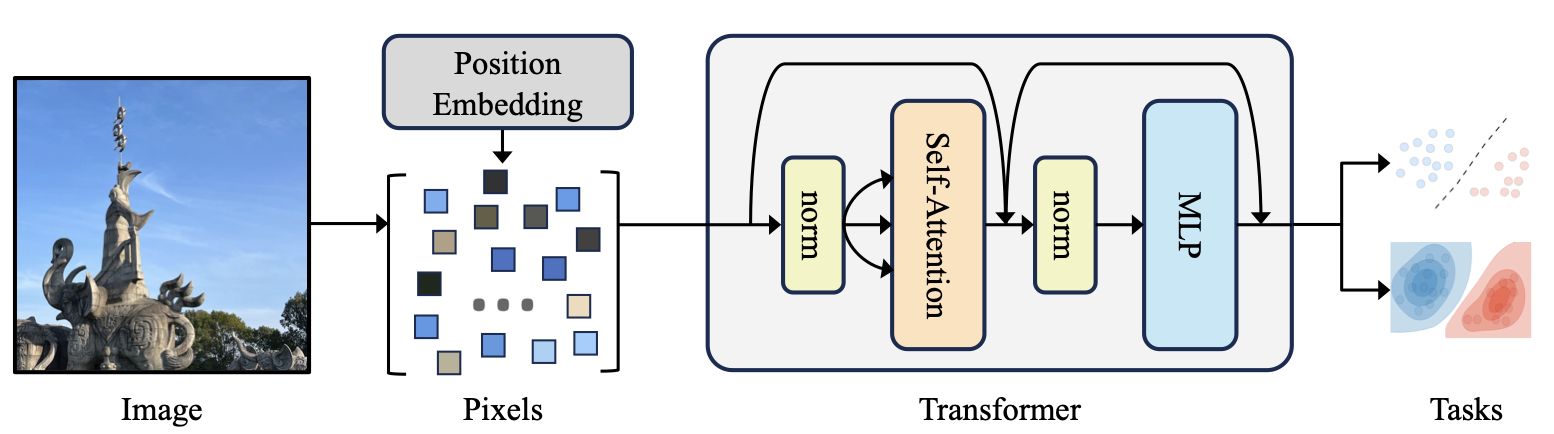

| Duy-Kien Nguyen, Mahmoud Assran, Unnat Jain, Martin R Oswald, Cees G M Snoek, Xinlei Chen: An Image is Worth More Than 16x16 Patches: Exploring Transformers on Individual Pixels. In: ICLR, 2025. @inproceedings{NguyenICLR2025,

title = {An Image is Worth More Than 16x16 Patches: Exploring Transformers on Individual Pixels},

author = {Duy-Kien Nguyen and Mahmoud Assran and Unnat Jain and Martin R Oswald and Cees G M Snoek and Xinlei Chen},

url = {https://arxiv.org/abs/2406.09415},

year = {2025},

date = {2025-04-24},

urldate = {2024-06-13},

booktitle = {ICLR},

abstract = {This work does not introduce a new method. Instead, we present an interesting finding that questions the necessity of the inductive bias -- locality in modern computer vision architectures. Concretely, we find that vanilla Transformers can operate by directly treating each individual pixel as a token and achieve highly performant results. This is substantially different from the popular design in Vision Transformer, which maintains the inductive bias from ConvNets towards local neighborhoods (e.g. by treating each 16x16 patch as a token). We mainly showcase the effectiveness of pixels-as-tokens across three well-studied tasks in computer vision: supervised learning for object classification, self-supervised learning via masked autoencoding, and image generation with diffusion models. Although directly operating on individual pixels is less computationally practical, we believe the community must be aware of this surprising piece of knowledge when devising the next generation of neural architectures for computer vision.},

howpublished = {arXiv:2406.09415},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

This work does not introduce a new method. Instead, we present an interesting finding that questions the necessity of the inductive bias -- locality in modern computer vision architectures. Concretely, we find that vanilla Transformers can operate by directly treating each individual pixel as a token and achieve highly performant results. This is substantially different from the popular design in Vision Transformer, which maintains the inductive bias from ConvNets towards local neighborhoods (e.g. by treating each 16x16 patch as a token). We mainly showcase the effectiveness of pixels-as-tokens across three well-studied tasks in computer vision: supervised learning for object classification, self-supervised learning via masked autoencoding, and image generation with diffusion models. Although directly operating on individual pixels is less computationally practical, we believe the community must be aware of this surprising piece of knowledge when devising the next generation of neural architectures for computer vision. |

| Ivona Najdenkoska, Mohammad Mahdi Derakhshani, Yuki M Asano, Nanne van Noord, Marcel Worring, Cees G M Snoek

: TULIP: Token-length Upgraded CLIP. In: ICLR, 2025. @inproceedings{NajdenkoskaICLR25,

title = {TULIP: Token-length Upgraded CLIP},

author = {Ivona Najdenkoska and Mohammad Mahdi Derakhshani and Yuki M Asano and Nanne van Noord and Marcel Worring and Cees G M Snoek

},

url = {https://arxiv.org/abs/2410.10034},

year = {2025},

date = {2025-04-24},

urldate = {2024-10-13},

booktitle = {ICLR},

abstract = {We address the challenge of representing long captions in vision-language models, such as CLIP. By design these models are limited by fixed, absolute positional encodings, restricting inputs to a maximum of 77 tokens and hindering performance on tasks requiring longer descriptions. Although recent work has attempted to overcome this limit, their proposed approaches struggle to model token relationships over longer distances and simply extend to a fixed new token length. Instead, we propose a generalizable method, named TULIP, able to upgrade the token length to any length for CLIP-like models. We do so by improving the architecture with relative position encodings, followed by a training procedure that (i) distills the original CLIP text encoder into an encoder with relative position encodings and (ii) enhances the model for aligning longer captions with images. By effectively encoding captions longer than the default 77 tokens, our model outperforms baselines on cross-modal tasks such as retrieval and text-to-image generation.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

We address the challenge of representing long captions in vision-language models, such as CLIP. By design these models are limited by fixed, absolute positional encodings, restricting inputs to a maximum of 77 tokens and hindering performance on tasks requiring longer descriptions. Although recent work has attempted to overcome this limit, their proposed approaches struggle to model token relationships over longer distances and simply extend to a fixed new token length. Instead, we propose a generalizable method, named TULIP, able to upgrade the token length to any length for CLIP-like models. We do so by improving the architecture with relative position encodings, followed by a training procedure that (i) distills the original CLIP text encoder into an encoder with relative position encodings and (ii) enhances the model for aligning longer captions with images. By effectively encoding captions longer than the default 77 tokens, our model outperforms baselines on cross-modal tasks such as retrieval and text-to-image generation. |